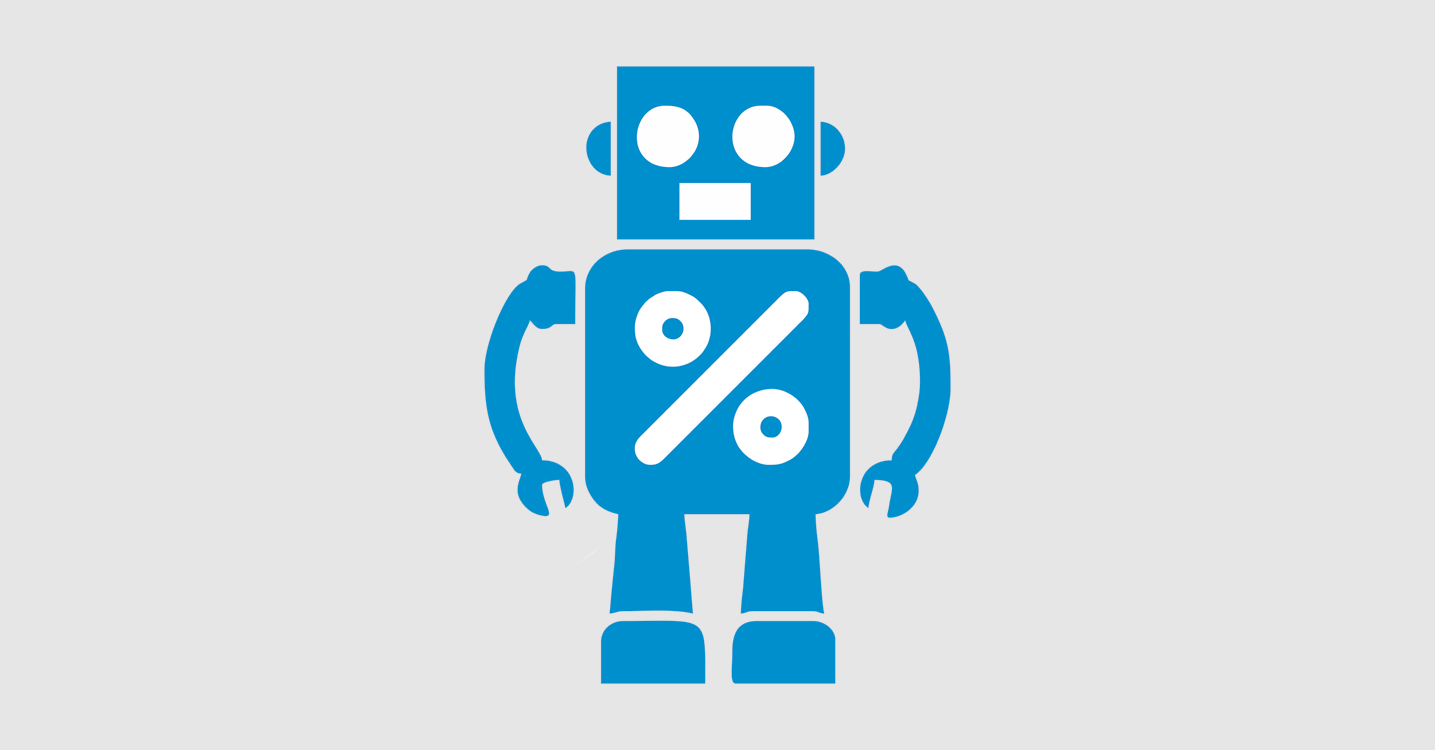

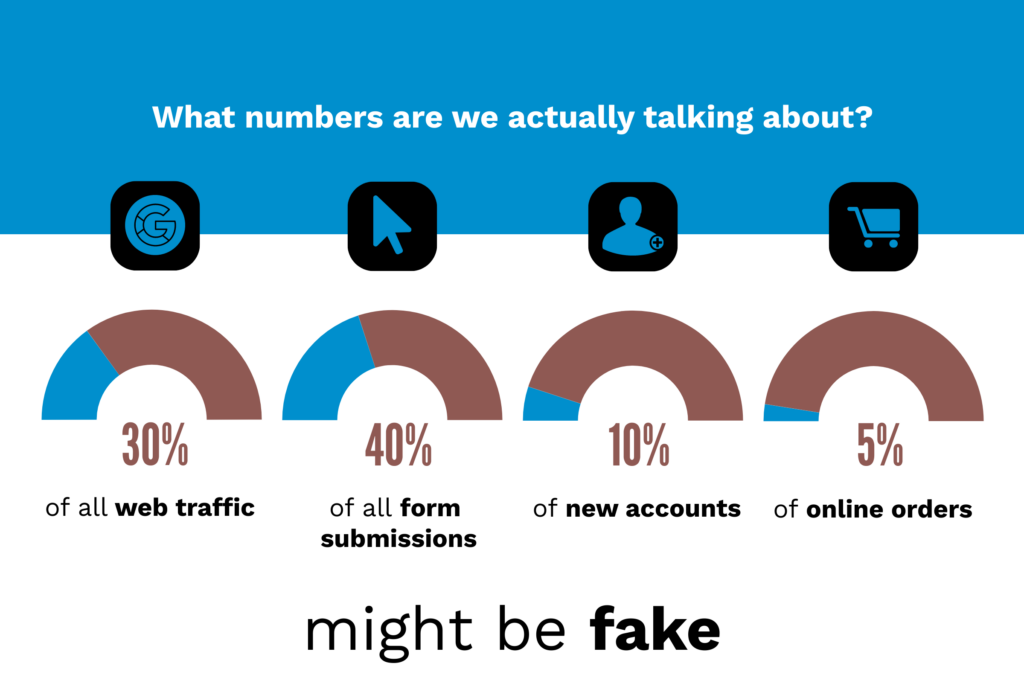

In today’s digital world, ensuring the security and integrity of your website is paramount. One crucial part of this is detecting and managing traffic from VPNs (Virtual Private Networks). VPNs have legitimate uses, but spammers and malicious actors also frequently use them to mask their identities. CleanTalk’s BlackLists Database offers a powerful tool for identifying and managing VPN traffic. This guide walks you through detecting VPN IPs using the CleanTalk BlackLists Database.

Why is it Important to Detect VPN Traffic?

Detecting VPN traffic is essential for several reasons:

Enhanced Security: VPNs can be used by malicious actors to hide their true IP addresses, making it harder to track their activities. By identifying and managing VPN traffic, you can better protect your website from potential threats.

Spam and Hacking Prevention: Spammers often use VPNs to bypass IP-based spam filters. Detecting VPN traffic helps in reducing spam submissions and maintaining the quality of user interactions on your site.

Accurate Analytics: VPNs can skew your website analytics by masking the true geographic locations of visitors. Identifying VPN traffic helps in maintaining more accurate visitor data.

CleanTalk BlackLists Database for VPN and Malicious Traffic Detection

CleanTalk’s BlackLists Database provides a comprehensive resource for identifying VPNs, hosting services, and other potentially harmful network types. The database includes information on the type of network, spam frequency, and whether the IP has been involved in spam or malicious activities.

Here’s an example of a response from the CleanTalk API:

```json
{
  "data": {
    "IP_ADDRESS": {
      "domains_count": 0,
      "domains_list": null,
      "in_antispam_previous": 0,
      "spam_frequency_24h": 0,
      "spam_rate": 1,
      "in_security": 1,
      "country": "US",
      "in_antispam": 1,
      "frequency": 33,
      "in_antispam_updated": "2024-07-28 05:40:40",
      "updated": "2024-07-28 16:40:43",
      "appears": 1,
      "network_type": "hosting",
      "submitted": "2022-08-22 00:15:40",
      "sha256": "69b4bf5e24594462df40c591636ed9ad3438e8f2d6284069d0c71e8c0ee8a9ad"
    }
  }
}
```

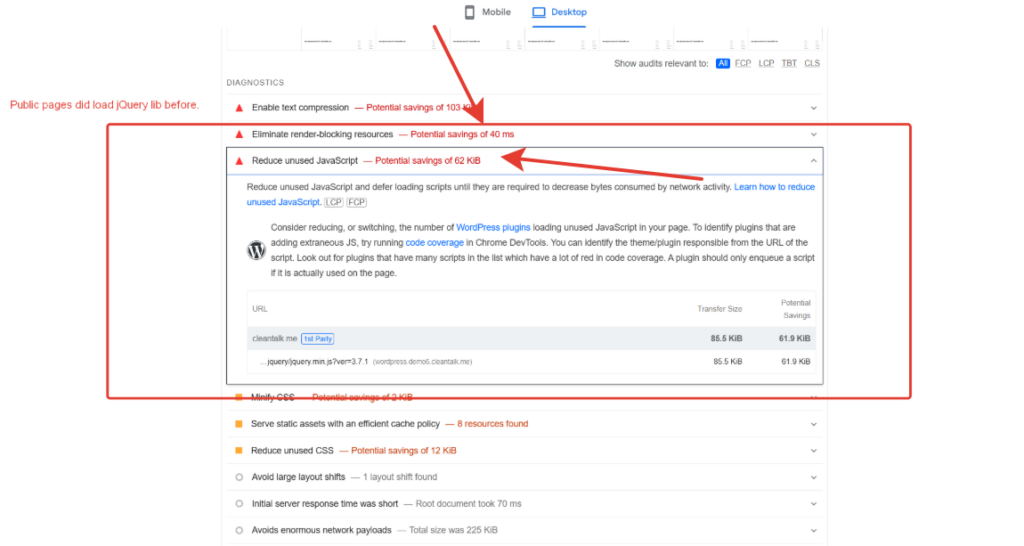

In this response, network_type is “hosting”, a category that often overlaps with VPN services. For TOR networks, network_type is “tor”. For networks belonging to VPN services, it is “paid_vpn”. For the most accurate detection of VPN traffic, we recommend relying on the network_type parameter returned by our API. Treat “hosting” networks as suspect as well, not only “paid_vpn”.
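The Python snippet below is a minimal sketch of reading these fields. It parses a trimmed copy of the sample response above, keeping only a few of its fields. It then flags the network types this section mentions. The helper names `network_type_of` and `looks_like_vpn` are hypothetical. The suspect set simply mirrors the recommendation above, so adjust it to your own policy.

```python
import json

# Trimmed copy of the sample response above; "IP_ADDRESS" stands in for the queried IP.
SAMPLE_RESPONSE = """
{
  "data": {
    "IP_ADDRESS": {
      "spam_rate": 1,
      "country": "US",
      "frequency": 33,
      "updated": "2024-07-28 16:40:43",
      "network_type": "hosting"
    }
  }
}
"""

# Network types this article suggests treating as likely VPN/anonymizer traffic.
SUSPECT_NETWORK_TYPES = {"paid_vpn", "hosting", "tor"}


def network_type_of(response_text: str, ip: str):
    """Return the network_type reported for `ip`, or None if it is absent."""
    payload = json.loads(response_text)
    record = payload.get("data", {}).get(ip) or {}
    return record.get("network_type")


def looks_like_vpn(network_type) -> bool:
    """True if the network type is one of the suspect categories."""
    return network_type in SUSPECT_NETWORK_TYPES


nt = network_type_of(SAMPLE_RESPONSE, "IP_ADDRESS")
print(nt, looks_like_vpn(nt))  # hosting True
```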

Some examples of IP types:

- https://cleantalk.org/blacklists/85.192.161.161: the IP type is not defined.

- https://cleantalk.org/blacklists/67.223.118.81: the IP belongs to the hosting network type.

- https://cleantalk.org/blacklists/109.70.100.1: the IP belongs to the TOR network type.

- https://cleantalk.org/blacklists/66.249.64.25: the IP belongs to the good_bots network type (this is the Google search bot).

Accessing CleanTalk BlackLists Database

You can access the CleanTalk BlackLists Database in two ways:

- API Access: The API provides real-time updates and allows you to query IP addresses on demand. This is ideal for applications requiring the most up-to-date information.

- Database Files: You can also download database files, which are updated hourly. This method is suitable for offline processing or bulk operations.
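With the downloaded database files, you can run lookups locally without any API calls. This guide does not describe the exact format of CleanTalk's files. The sketch below therefore assumes a hypothetical CSV layout with `network` and `network_type` columns. Its sample data uses documentation-only IP ranges (RFC 5737), not real CleanTalk data. `load_networks` and `lookup` are illustrative names; adapt the parsing to the real file format.

```python
import csv
import io
import ipaddress

# HYPOTHETICAL file layout, for illustration only: a CSV with a header row
# "network,network_type", where "network" is a single IP or a CIDR range.
# The ranges below are RFC 5737 documentation addresses, not CleanTalk data.
EXAMPLE_FILE = """network,network_type
192.0.2.0/24,tor
198.51.100.0/24,hosting
203.0.113.7,paid_vpn
"""


def load_networks(fileobj):
    """Parse the file into a list of (ip_network, network_type) pairs."""
    entries = []
    for row in csv.DictReader(fileobj):
        # strict=False also accepts a single address, e.g. "203.0.113.7" becomes a /32.
        net = ipaddress.ip_network(row["network"].strip(), strict=False)
        entries.append((net, row["network_type"].strip()))
    return entries


def lookup(entries, ip: str):
    """Return the network_type of the first range containing `ip`, or None."""
    addr = ipaddress.ip_address(ip)
    for net, network_type in entries:
        # Membership is simply False when IPv4/IPv6 versions differ.
        if addr in net:
            return network_type
    return None


entries = load_networks(io.StringIO(EXAMPLE_FILE))
print(lookup(entries, "192.0.2.15"))  # tor
print(lookup(entries, "8.8.8.8"))     # None
```

The linear scan keeps the sketch short. For large files, a sorted structure or a dedicated IP-range library would make lookups faster.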

For detailed pricing information and access levels, visit CleanTalk’s pricing page.

Integration Capabilities

Example Code for Checking VPN IPs Using CleanTalk API

This example demonstrates how to use the CleanTalk API to check IP addresses and block traffic based on specific conditions.

Logic of the Check:

1. Block IP addresses if the network type is “hosting”, “paid_vpn” or “tor”.

2. Allow IP addresses if the network type is “good_bots”.

3. For any other network type, block the IP address if its data was updated within the last 7 days and its spam frequency is greater than 5; otherwise, allow it.

```python
import datetime

import requests

API_KEY = 'your_api_key'   # your CleanTalk access key
IP_ADDRESS = 'IP_ADDRESS'  # the visitor IP address to check

# Query the spam_check method for a single IP address
response = requests.get(
    'https://api.cleantalk.org/',
    params={'method_name': 'spam_check', 'auth_key': API_KEY, 'ip': IP_ADDRESS},
    timeout=10,
)
response.raise_for_status()
data = response.json()

ip_info = data['data'][IP_ADDRESS]
# .get() guards against fields that are missing or null in the record
network_type = ip_info.get('network_type')
frequency = ip_info.get('frequency') or 0
updated_raw = ip_info.get('updated')
updated_date = (datetime.datetime.strptime(updated_raw, '%Y-%m-%d %H:%M:%S')
                if updated_raw else None)

# Check logic
if network_type in ('hosting', 'tor', 'paid_vpn'):
    print("Block this IP address")
elif network_type == 'good_bots':
    print("Allow this IP address")
elif (updated_date is not None
      and (datetime.datetime.now() - updated_date).days <= 7
      and frequency > 5):
    # Any other network type: block only recent, frequent spam activity
    print("Block this IP address")
else:
    print("Allow this IP address")
```

Description of the Logic

Fetching Data from CleanTalk API:

1. A request is sent to the CleanTalk API to get information about the IP address.

2. The JSON response is parsed to extract information about the IP address.

Checking Network Type:

1. If network_type is “hosting”, “paid_vpn” or “tor”, the IP address is blocked.

2. If network_type is “good_bots”, the IP address is allowed.

Additional Check:

1. For any other network type (not “hosting”, “paid_vpn”, “tor” or “good_bots”), the updated date and the spam frequency are checked.

2. If the data was updated within the last 7 days and the spam frequency is greater than 5, the IP address is blocked.

3. Otherwise, the IP address is allowed.

This code lets you filter traffic based on the network type and other parameters returned by the CleanTalk API, helping to secure your site and block unwanted traffic. You can learn more about the spam_check API at https://cleantalk.org/help/api-spam-check.
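To test the same rules without calling the API, you can move them into a pure function that takes a single IP record. The sketch below is our own refactoring, not part of CleanTalk's API. `decide` and `BLOCK_TYPES` are hypothetical names. Returning "allow" for a record with no `updated` value is an assumption. The optional `now` argument lets tests pin the current time.

```python
from datetime import datetime
from typing import Optional

# Network types the article recommends blocking outright
BLOCK_TYPES = {"hosting", "tor", "paid_vpn"}


def decide(ip_info: dict, now: Optional[datetime] = None) -> str:
    """Apply the three rules above to one IP record; return "block" or "allow"."""
    now = now or datetime.now()
    network_type = ip_info.get("network_type")

    # Rule 1: hosting, TOR and paid VPN networks are blocked
    if network_type in BLOCK_TYPES:
        return "block"
    # Rule 2: good bots (e.g. search engine crawlers) are allowed
    if network_type == "good_bots":
        return "allow"

    # Rule 3: other networks are blocked only for recent, frequent spam activity
    updated_raw = ip_info.get("updated")
    if not updated_raw:
        return "allow"  # assumption: no timestamp means nothing to judge recency by
    updated = datetime.strptime(updated_raw, "%Y-%m-%d %H:%M:%S")
    frequency = ip_info.get("frequency") or 0
    if (now - updated).days <= 7 and frequency > 5:
        return "block"
    return "allow"


fixed_now = datetime(2024, 7, 30, 12, 0, 0)
print(decide({"network_type": "hosting"}, fixed_now))                         # block
print(decide({"network_type": "good_bots"}, fixed_now))                       # allow
print(decide({"updated": "2024-07-28 16:40:43", "frequency": 33}, fixed_now))  # block
print(decide({"updated": "2024-07-01 00:00:00", "frequency": 33}, fixed_now))  # allow
```

Keeping the HTTP request separate from the decision means the rules can be changed or tested with fixed inputs, while the request code stays the same.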

Benefits of Using CleanTalk for VPN Detection

Using CleanTalk for VPN detection offers several advantages:

- Comprehensive Coverage: CleanTalk’s database covers a wide range of IP addresses, including those used by VPNs, hosting services, and other potentially harmful networks.

- Real-Time Data: The API provides real-time data, ensuring you always have the most current information.

- Easy Integration: CleanTalk’s solutions are easy to integrate into your existing systems, offering flexibility and customization based on your specific needs.

- Enhanced Security: By effectively identifying and managing VPN traffic, you can better protect your website from spam, fraud, and other malicious activities.

For more information about CleanTalk BlackLists Database, visit CleanTalk BlackLists.

Detecting VPN IPs is crucial for maintaining the security and integrity of your website. CleanTalk’s BlackLists Database provides a robust solution for identifying and managing VPN traffic. With real-time API access and comprehensive database files, you can ensure your site remains secure and spam-free. Explore CleanTalk’s pricing options to find the right plan for your needs and start protecting your site today.