Do you know how to hide your WordPress usernames from bad bots? We are glad to introduce a new improvement to the Security plugin: CleanTalk now lets you hide WordPress usernames from bots that collect them for brute-force attacks.

Before this improvement, bots could look up WordPress usernames by user ID and later use them to brute-force those accounts. For example, a request like «https://blog.cleantalk.org/?author=007» could return the URL «https://blog.cleantalk.org/author/james_bond», which exposes the username «james_bond».
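To make the leak concrete, here is a minimal Python sketch of what a bot does with the returned URL: it reads the last path segment after `/author/`. The function name `author_slug_from_redirect` is hypothetical, and the sketch assumes the default WordPress author permalink layout; on most sites that slug mirrors the login name, though it can differ.

```python
import re
from typing import Optional
from urllib.parse import urlparse

# Assumed default WordPress author archive path: /author/<slug>/
AUTHOR_PATH = re.compile(r"/author/([^/]+)/?$")


def author_slug_from_redirect(location: str) -> Optional[str]:
    """Return the author slug found in an author archive URL, or None."""
    path = urlparse(location).path
    match = AUTHOR_PATH.search(path)
    return match.group(1) if match else None


# The example URL from this post exposes the slug directly.
print(author_slug_from_redirect("https://blog.cleantalk.org/author/james_bond"))
# prints: james_bond
```

A bot only has to repeat this for `?author=1`, `?author=2`, and so on to build a list of accounts to attack.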

This option is switched off by default, so to avoid this kind of exposure we highly recommend switching it on.

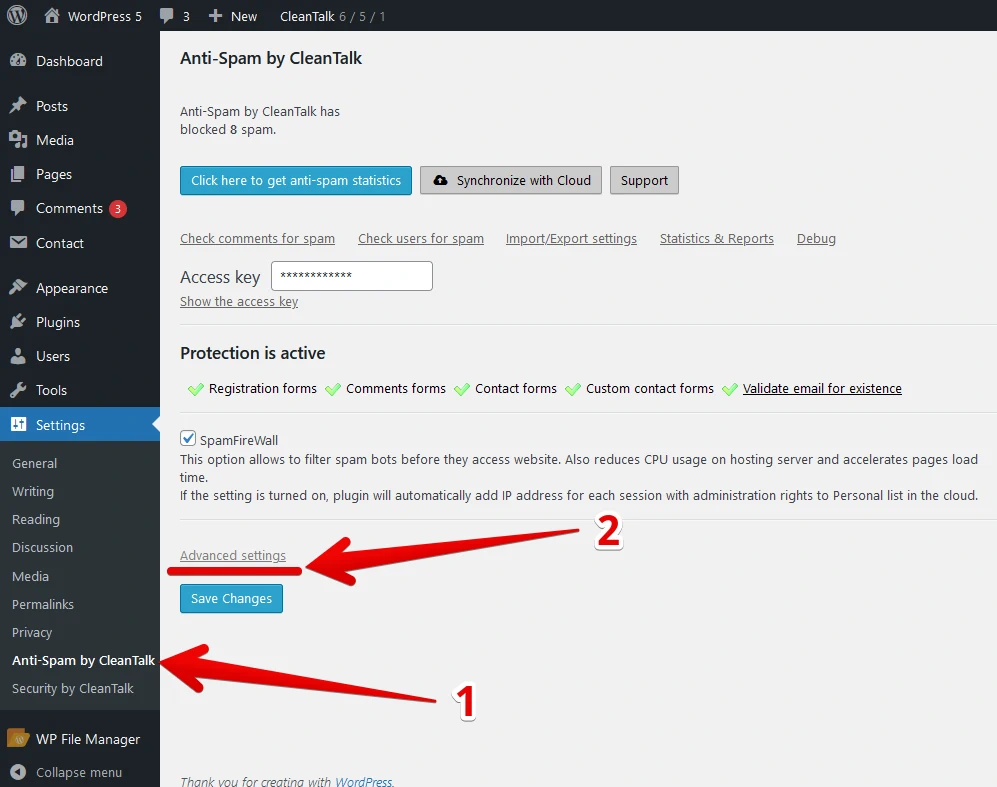

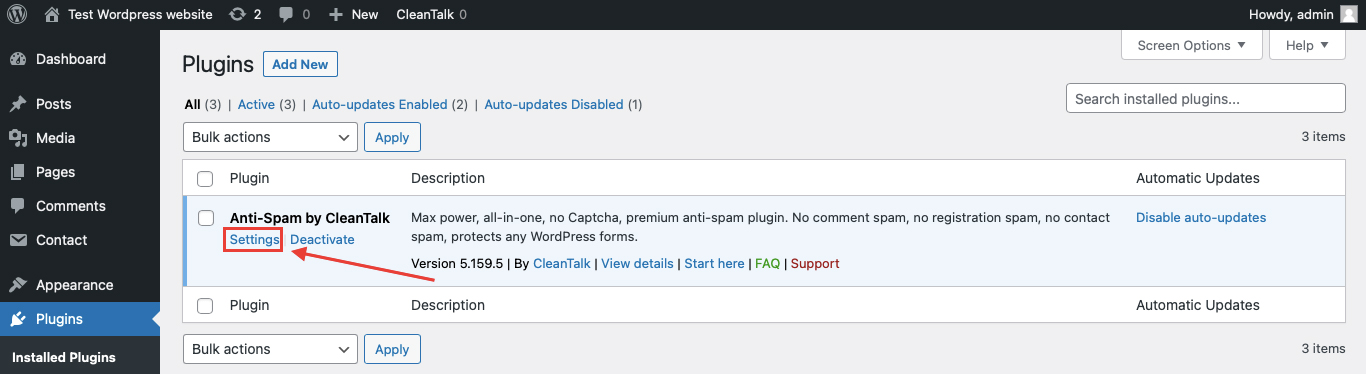

Step 1: Go to Plugins → Installed Plugins.

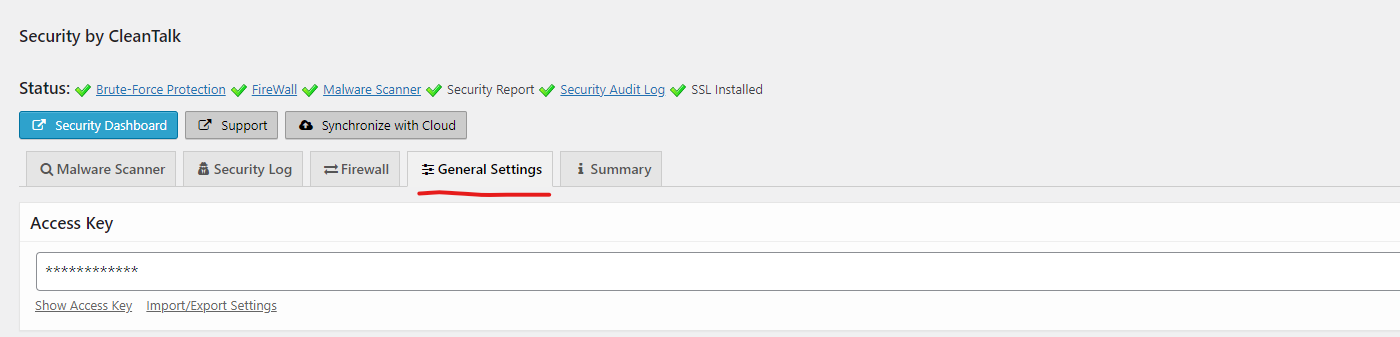

Step 2: Click Settings beneath the Security plugin entry.

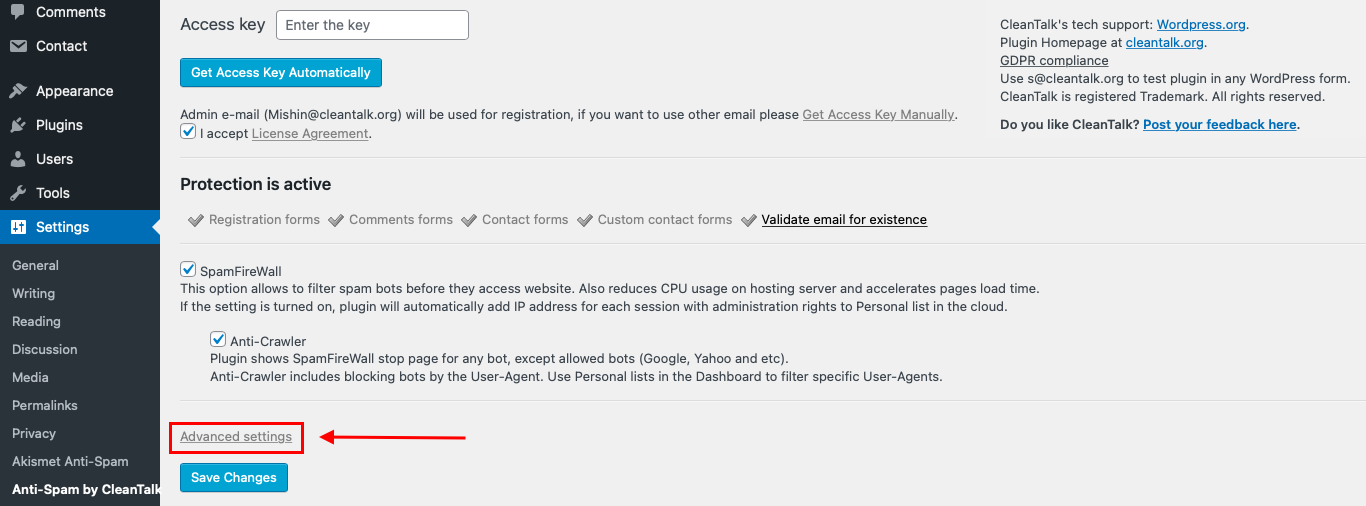

Then choose General Settings.

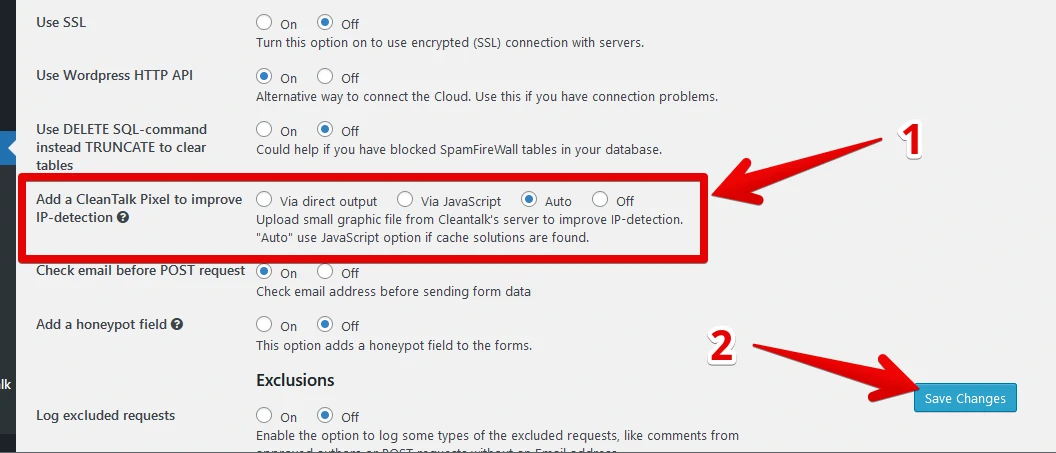

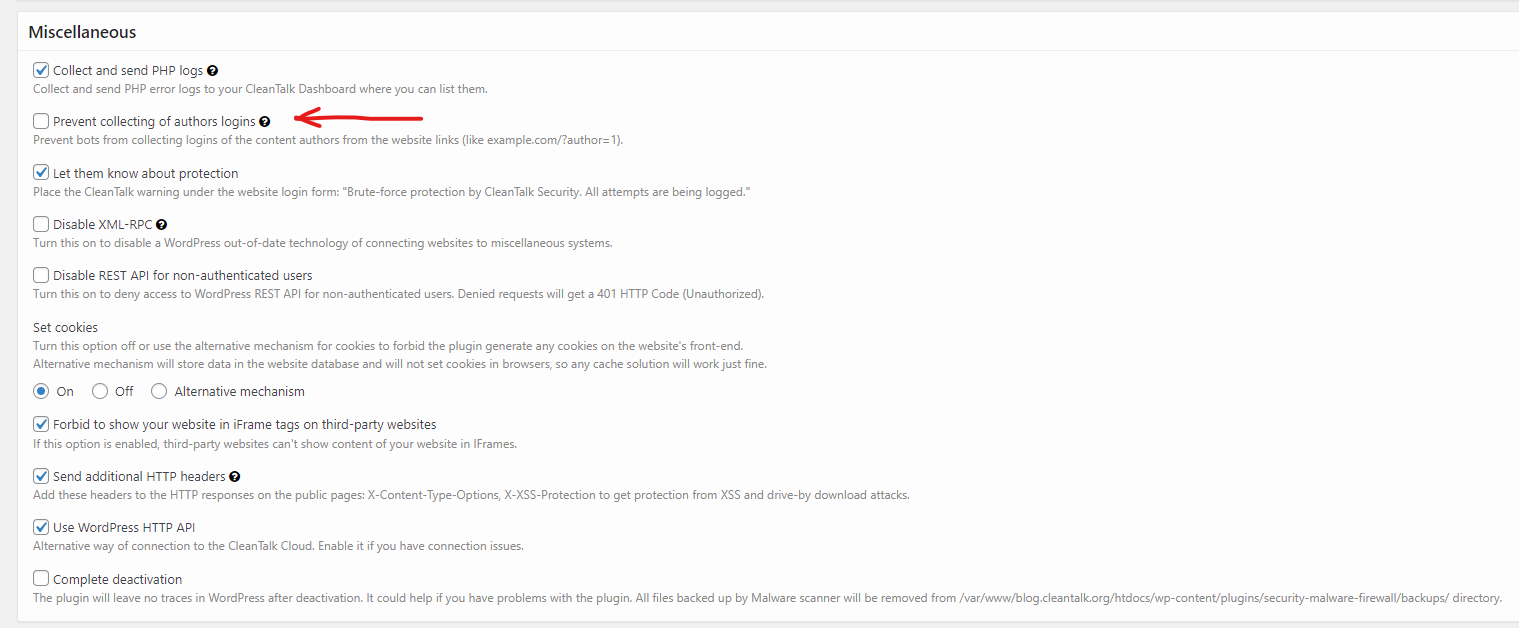

Step 3: Go to the Miscellaneous section, find the checkbox «Prevent collecting of authors logins», and check it.

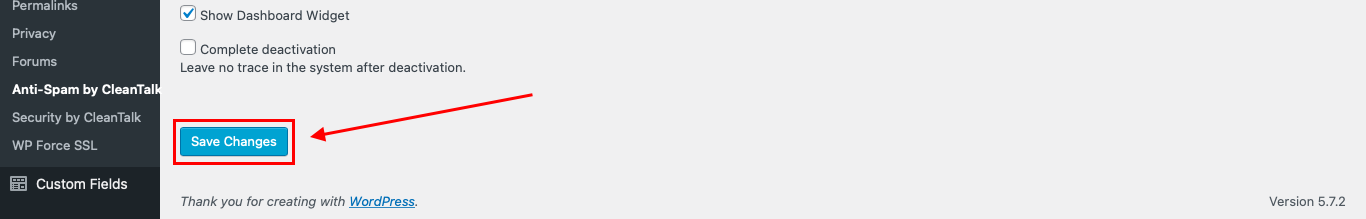

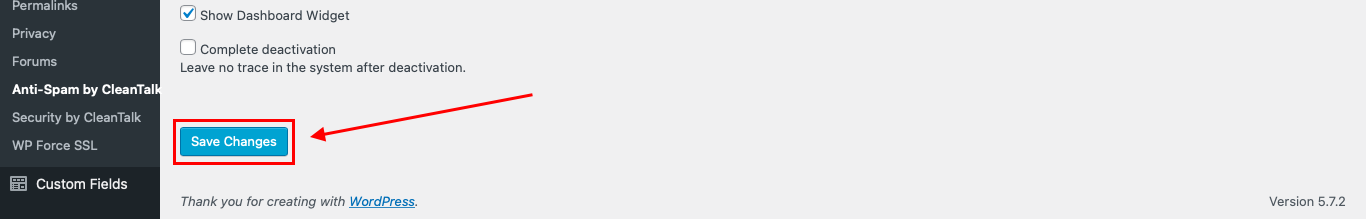

Step 4: Press the «Save Changes» button.

Success! That’s how quickly CleanTalk lets you hide WordPress usernames from bad bots.
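If you want to confirm the result on your own site, a rough check is to request `/?author=1` without following redirects and see whether the response still points at an `/author/...` URL. The sketch below uses only the Python standard library; `NoRedirect`, `leaks_author`, and `probe` are hypothetical helper names, and because this post does not say what the plugin returns once the option is enabled, the check only looks for the redirect that gives the username away.

```python
import urllib.error
import urllib.request
from typing import Optional


class NoRedirect(urllib.request.HTTPRedirectHandler):
    """Stop urllib from following redirects so the Location header can be inspected."""

    def redirect_request(self, req, fp, code, msg, headers, newurl):
        return None  # urllib then raises HTTPError for the 3xx response


def leaks_author(status: int, location: Optional[str]) -> bool:
    """True if the response is a redirect to an author archive URL."""
    return 300 <= status < 400 and bool(location) and "/author/" in location


def probe(base_url: str, author_id: int = 1) -> bool:
    """Request /?author=<id> on your own site and report whether it leaks."""
    opener = urllib.request.build_opener(NoRedirect)
    try:
        resp = opener.open(f"{base_url}/?author={author_id}", timeout=10)
        return leaks_author(resp.status, resp.headers.get("Location"))
    except urllib.error.HTTPError as err:
        return leaks_author(err.code, err.headers.get("Location"))


# Offline illustration with the redirect described earlier in this post.
print(leaks_author(301, "https://blog.cleantalk.org/author/james_bond"))  # prints: True
```

Call `probe("https://your-site.example")` before and after saving the setting, and only against a site you administer. A result of `False` means no redirect to an author URL was seen; it does not rule out other places where a theme might display the login name.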

If you have any questions, add a comment and we will be happy to help you.
