During interviews for Linux/Unix administrator roles, many IT companies ask what load average is, how Nginx differs from Apache httpd, and what a fork is. In this article, I will try to explain what interviewers expect to hear in response to these questions, and why.

It is important to understand the basics of administration very well. In an ideal situation, a system administrator's task comes with a list of requirements. If the situation is not ideal, there is in fact only one requirement: “I want everything to work”. In other words, the service must be available 24/7, and if a solution does not meet this requirement (which is where scalability and fault tolerance come in), we can say that the administrator did the job badly. But if two administrators' different solutions both work 24/7, how do we tell which one is better?

When selecting a solution for given requirements, a good system administrator focuses on two conditions: minimum consumption of resources and their balanced distribution.

The case where one expert needs 10 servers for the job and another needs only 2 is not worth discussing: it is obvious which is better. From here on, by resources I mean CPU, RAM and disk (HDD).

Consider this situation: one administrator has created a solution that uses 10% of the CPU, 5% of the RAM and 10% of the disk of all your equipment, and a second one uses 1% CPU, 40% RAM and 20% disk. Which of these solutions is better? Here things are not so obvious. Therefore, a good administrator should always be able to find a competent solution based on the resources available.

Imagine that we are entry-level programmers and we are asked to write a basic program that works with the network. The requirements are simple: handle two TCP connections simultaneously and write what we receive to a file.

Before developing the application, we need to recall which tools the Linux operating system offers (all examples in this article are based on this OS only). Linux gives us a set of system calls (that is, functions in the OS kernel that we can call directly from our program, thereby handing CPU time over to the kernel):

- socket – allocates a structure for our socket in kernel memory. The function returns to our program a file descriptor that refers to this structure;

- bind – changes the information in the socket structure that Linux allocated for us with socket, assigning it a local address and port;

- listen – like bind, changes data in our structure, telling the OS that we want to accept connections on this socket;

- connect – tells the OS to connect to a remote socket;

- accept – tells the OS that we want to take a new connection from another socket;

- read – asks the OS to give us a certain number of bytes from its buffer, which it received from the remote socket;

- write – asks the OS to send a certain number of bytes to the remote socket.

To establish a connection, we need to create a socket in Linux memory, fill it with the required data, and connect to the remote side.

socket → bind → connect → read/write

But if you trust the OS to select the outbound port (as well as the IP address) for you, then bind is not necessary:

socket → connect → read/write

To receive incoming connections, we need:

socket → bind → listen → accept → read/write

We now know enough to write a program. Let's proceed directly to writing it in C. Why C? Because the language's functions have the same names as the system calls (with a few exceptions, such as fork).

The program differ1.c

// The port on which we listen

#define PORT_NO 2222

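#include <stdio.h>

#include <stdlib.h>

#include <string.h>

#include <unistd.h>

#include <netinet/in.h>

// Print the reason for the failure and exit

static void error(const char *msg) { perror(msg); exit(1); }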

int main(int argc, char *argv[])

{

// The buffer where we will read data from a socket

long buffersize=50;

int sockfd, newsockfd;

socklen_t clilen;

// The variable in which to store the address of our buffer

char *buffer;

struct sockaddr_in serv_addr, cli_addr;

FILE * resultfile;

// allocated memory

buffer = malloc (buffersize+1);

// open the file for writing our messages

resultfile = fopen("/tmp/nginx_vs_apache.log","a");

bzero((char *) &serv_addr, sizeof(serv_addr));

bzero(buffer,buffersize+1);

serv_addr.sin_family = AF_INET;

serv_addr.sin_addr.s_addr = INADDR_ANY;

serv_addr.sin_port = htons(PORT_NO);

// create a structure (a socket), here SOCK_STREAM it is tcp/ip socket.

sockfd = socket(AF_INET, SOCK_STREAM, 0);

if (sockfd < 0) error("ERROR opening socket");

// define the structure of the socket, we will listen on port 2222 on all ip addresses

if (bind(sockfd, (struct sockaddr *) &serv_addr, sizeof(serv_addr)) < 0) error("ERROR on binding");

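// tell the OS to accept incoming connections on our socket, with a backlog of at most 50

listen(sockfd,50);

while (1) {

// in an endless loop, accept incoming connections and read from them

clilen = sizeof(cli_addr);

newsockfd = accept(sockfd, (struct sockaddr *) &cli_addr, &clilen);

if (newsockfd < 0) error("ERROR on accept");

// clear the buffer so that a short message is not followed by old data

bzero(buffer,buffersize+1);

read(newsockfd,buffer,buffersize);

// print the data as text, never as a format string

fprintf(resultfile, "%s", buffer);

fflush (resultfile);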

}

close(sockfd);

return 0;

}

Compile and run our daemon:

[tolik@localhost]$ gcc -o differ differ1.c

[tolik@localhost]$ ./differ

Let's see what happened:

[root@ localhost]# ps axuf | grep [d]iffer

tolik 45409 0.0 0.0 4060 460 pts/12 S+ 01:14 0:00 | \_ ./differ

[root@localhost ]# netstat -tlnp | grep 2222

tcp 0 0 0.0.0.0:2222 0.0.0.0:* LISTEN 45409/./differ

[root@localhost ]# ls -lh /proc/45409/fd

total 0

lrwx------ 1 tolik tolik 64 Apr 19 01:16 0 -> /dev/pts/12

lrwx------ 1 tolik tolik 64 Apr 19 01:16 1 -> /dev/pts/12

lrwx------ 1 tolik tolik 64 Apr 19 01:16 2 -> /dev/pts/12

l-wx------ 1 tolik tolik 64 Apr 19 01:16 3 -> /tmp/nginx_vs_apache.log

lrwx------ 1 tolik tolik 64 Apr 19 01:16 4 -> socket:[42663416]

[root@localhost ]# netstat -apeen | grep 42663416

tcp 0 0 0.0.0.0:2222 0.0.0.0:* LISTEN 500 42663416 45409/./differ

[root@localhost ]# strace -p 45409

Process 45409 attached - interrupt to quit

accept(4, ^C

Process 45409 detached

[root@localhost ]#

The process is in the sleep state (S+ in the ps output).

This program will continue to execute (get CPU time) only when a new connection arrives on port 2222. In all other cases, the program will never get CPU time: it will not even request it from the OS, and therefore will not affect the load average (hereafter LA), consuming only memory.

In another console, run the first client:

[tolik@localhost ]$ telnet localhost 2222

Connected to localhost.

Escape character is '^]'.

test client 1

Look at the file:

[root@localhost ]# cat /tmp/nginx_vs_apache.log

test client 1

Open a second connection:

[tolik@localhost ]$ telnet localhost 2222

Connected to localhost.

Escape character is '^]'.

test client 2

We see the result:

[root@localhost ]# cat /tmp/nginx_vs_apache.log

test client 1

The content of the file shows that only the first message, from the first client, has arrived. But we have already sent the second message, so it must be somewhere. The OS handles all network connections, so the message test client 2 is now sitting in a buffer in operating system memory that is not available to us. The only way to get this data is to take the new connection with accept and then call read.

Let’s try to write something in the first client:

[tolik@localhost ]$ telnet localhost 2222

Connected to localhost.

Escape character is '^]'.

test client 1

blablabla

Check the log:

[root@localhost ]# cat /tmp/nginx_vs_apache.log

test client 1

The new message did not reach the log. This is because we call read only once per connection, so the log gets only the first message.

Let’s try to close our first connection:

[tolik@localhost ]$ telnet localhost 2222

Connected to localhost.

Escape character is '^]'.

test client 1

bla bla bla

^]

telnet> quit

Connection closed.

At this point, our program moves on to the next accept and read in the loop, and therefore receives the message from the second connection:

[root@localhost ]# cat /tmp/nginx_vs_apache.log

test client 1

test client 2

Our message bla bla bla never appeared: we had already closed the socket, and the OS cleared the buffer, thereby discarding our data. We need to improve the program to keep reading from a socket for as long as data keeps coming.

Program that reads from the socket until it is closed: differ2.c

#define PORT_NO 2222

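#include <stdio.h>

#include <stdlib.h>

#include <string.h>

#include <unistd.h>

#include <netinet/in.h>

// Print the reason for the failure and exit

static void error(const char *msg) { perror(msg); exit(1); }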

int main(int argc, char *argv[])

{

int sockfd, newsockfd;

socklen_t clilen;

char buffer;

char * pointbuffer = &buffer;

struct sockaddr_in serv_addr, cli_addr;

FILE * resultfile;

resultfile = fopen("/tmp/nginx_vs_apache.log","a");

bzero((char *) &serv_addr, sizeof(serv_addr));

serv_addr.sin_family = AF_INET;

serv_addr.sin_addr.s_addr = INADDR_ANY;

serv_addr.sin_port = htons(PORT_NO);

sockfd = socket(AF_INET, SOCK_STREAM, 0);

if (sockfd < 0) error("ERROR opening socket");

if (bind(sockfd, (struct sockaddr *) &serv_addr, sizeof(serv_addr)) < 0) error("ERROR on binding");

listen(sockfd,50);

while (1) {

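// accept needs to know the size of the address structure

clilen = sizeof(cli_addr);

newsockfd = accept(sockfd, (struct sockaddr *) &cli_addr, &clilen);

if (newsockfd < 0) error("ERROR on accept");

// read one byte at a time until the client closes the connection or an error occurs

while (read(newsockfd, pointbuffer,1) > 0) {

fputc(buffer, resultfile);

fflush (resultfile);

}

// this connection is finished, release its descriptor

close(newsockfd);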

}

close(sockfd);

return 0;

}

The program is not much different from the previous one. We added a loop around the read call to keep getting data from the socket for as long as it arrives. Let's check.

Clear the file:

[root@localhost ]# > /tmp/nginx_vs_apache.log

Compile and run:

[tolik@localhost ]$ gcc -o differ differ2.c

[tolik@localhost ]$ ./differ

First client:

[tolik@localhost ]$ telnet localhost 2222

Connected to localhost.

Escape character is '^]'.

client test 1

yoyoyo

Second client:

[tolik@localhost ]$ telnet localhost 2222

Connected to localhost.

Escape character is '^]'.

client test 2

yooyoy

Check what happened:

[root@localhost ]# cat /tmp/nginx_vs_apache.log

client test 1

yoyoyo

This time all is well: we received all of the data. But the problem remains: the two connections are processed sequentially, one after the other, which does not meet our requirements. If we close the first connection (Ctrl+] and then quit), the data from the second connection is immediately added to the log:

[root@localhost ]# cat /tmp/nginx_vs_apache.log

client test 1

yoyoyo

client test 2

yooyoy

The data has arrived. But how do we process two connections in parallel? This is where fork comes to help. What does the fork system call do in Linux? A good answer to this question at a job interview: nothing. The fork system call is a legacy interface kept in Linux for backward compatibility. When you call fork() from a program, the C library in fact invokes the clone system call. clone creates a copy of the process and puts both processes in the queue for the CPU. What matters is how the data is copied. A naive fork, as in early Unix implementations, copies the data (variables, buffers, etc.) straight into the memory space of the child process. On Linux the child instead shares the parent's memory pages, which the MMU marks read-only, and a page is copied only when one of the processes tries to change it (copy-on-write). With the naive approach, calling fork 10 times and using the data read-only would give you 10 identical copies of the data in memory, which is clearly not what you want. With copy-on-write you get 10 running processes with a single block of memory, and memory is copied only when a process tries to change it. You will agree that the second algorithm is much more efficient.

Program with fork: differ3.c

#define PORT_NO 2222

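#include <stdio.h>

#include <stdlib.h>

#include <string.h>

#include <unistd.h>

#include <netinet/in.h>

// Print the reason for the failure and exit

static void error(const char *msg) { perror(msg); exit(1); }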

int main(int argc, char *argv[])

{

int sockfd, newsockfd;

socklen_t clilen;

char buffer;

char * pointbuffer = &buffer;

struct sockaddr_in serv_addr, cli_addr;

FILE * resultfile;

int pid=1;

resultfile = fopen("/tmp/nginx_vs_apache.log","a");

bzero((char *) &serv_addr, sizeof(serv_addr));

serv_addr.sin_family = AF_INET;

serv_addr.sin_addr.s_addr = INADDR_ANY;

serv_addr.sin_port = htons(PORT_NO);

sockfd = socket(AF_INET, SOCK_STREAM, 0);

if (sockfd < 0) error("ERROR opening socket");

if (bind(sockfd, (struct sockaddr *) &serv_addr, sizeof(serv_addr)) < 0) error("ERROR on binding");

listen(sockfd,50);

while (pid!=0) {

// accept needs to know the size of the address structure

clilen = sizeof(cli_addr);

newsockfd = accept(sockfd, (struct sockaddr *) &cli_addr, &clilen);

if (newsockfd < 0) error("ERROR on accept");

pid=fork();

if (pid!=0) {

close(newsockfd);

fprintf(resultfile,"New process was started with pid=%d\n",pid);

fflush (resultfile);

}

}

// only the child gets here: read until the client closes the connection

while (read(newsockfd, pointbuffer,1) > 0) {

fputc(buffer, resultfile);

fflush (resultfile);

}

close(sockfd);

return 0;

}

This program does the same as before: we call accept to take a new connection. Next, we call fork. In the master process (fork returned the pid of the created process), we close the new connection in the parent (it is open in both the parent and the child). In the child process (fork returned 0), we start reading from the socket that accept opened in the parent process. In effect, the parent process only accepts connections, and read/write is done in the child processes.

Compile and run:

[tolik@localhost ]$ gcc -o differ differ3.c

[tolik@localhost ]$ ./differ

Clear our log file:

[root@localhost ]# > /tmp/nginx_vs_apache.log

Look at the processes:

[root@localhost ]# ps axuf | grep [d]iffer

tolik 45643 0.0 0.0 4060 460 pts/12 S+ 01:40 0:00 | \_ ./differ

Client 1:

[tolik@localhost ]$ telnet localhost 2222

Connected to localhost.

Escape character is '^]'.

client 1 test

megatest

Client 2:

[tolik@localhost ]$ telnet localhost 2222

Connected to localhost.

Escape character is '^]'.

client2 test

yoyoyoy

Look at the processes:

[root@localhost ]# ps axuf | grep [d]iffer

tolik 45643 0.0 0.0 4060 504 pts/12 S+ 01:40 0:00 | \_ ./differ

tolik 45663 0.0 0.0 4060 156 pts/12 S+ 01:41 0:00 | \_ ./differ

tolik 45665 0.0 0.0 4060 160 pts/12 S+ 01:41 0:00 | \_ ./differ

We leave both connections open, so more data could still be sent. Let's look at our log:

[root@localhost ]# cat /tmp/nginx_vs_apache.log

New process was started with pid=44163

New process was started with pid=44165

client 1 test

megatest

client2 test

yoyoyoy

Two connections are processed at the same time – we got the desired result.

The program works, but not fast enough. It first accepts the connection and only then calls fork, and only one process accepts connections. The question is: can multiple processes in Linux work with the same TCP port? Let's try.

Program with pre-fork: differ_prefork.c

#define PORT_NO 2222

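#include <stdio.h>

#include <stdlib.h>

#include <string.h>

#include <unistd.h>

#include <netinet/in.h>

// Print the reason for the failure and exit

static void error(const char *msg) { perror(msg); exit(1); }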

int main(int argc, char *argv[])

{

int sockfd, newsockfd, startservers, count ;

socklen_t clilen;

char buffer;

char * pointbuffer = &buffer;

struct sockaddr_in serv_addr, cli_addr;

FILE * resultfile;

int pid=1;

resultfile = fopen("/tmp/nginx_vs_apache.log","a");

bzero((char *) &serv_addr, sizeof(serv_addr));

serv_addr.sin_family = AF_INET;

serv_addr.sin_addr.s_addr = INADDR_ANY;

serv_addr.sin_port = htons(PORT_NO);

sockfd = socket(AF_INET, SOCK_STREAM, 0);

if (sockfd < 0) error("ERROR opening socket");

if (bind(sockfd, (struct sockaddr *) &serv_addr, sizeof(serv_addr)) < 0) error("ERROR on binding");

listen(sockfd,50);

startservers=2;

count = 0;

while (pid!=0) {

if (count < startservers)

{

pid=fork();

if (pid!=0) {

// the parent has no client connection to close yet, it only logs the new child

fprintf(resultfile,"New process was started with pid=%d\n",pid);

fflush (resultfile);

}

count = count + 1;

}

//sleep (1);

}

// only the children get here: each one waits for its own connection

clilen = sizeof(cli_addr);

newsockfd = accept(sockfd, (struct sockaddr *) &cli_addr, &clilen);

if (newsockfd < 0) error("ERROR on accept");

while (read(newsockfd, pointbuffer,1) > 0) {

fputc(buffer, resultfile);

fflush (resultfile);

}

close(sockfd);

return 0;

}

As you can see, the program has not changed much: we just call fork in a loop. Here we create two child processes, and then each of them calls accept to take a new connection. Let's check.

Compile and run:

[tolik@localhost ]$ gcc -o differ differ_prefork.c

[tolik@localhost ]$ ./differ

Look at the processes:

[root@localhost ]# ps axuf | grep [d]iffer

tolik 44194 98.0 0.0 4060 504 pts/12 R+ 23:35 0:07 | \_ ./differ

tolik 44195 0.0 0.0 4060 152 pts/12 S+ 23:35 0:00 | \_ ./differ

tolik 44196 0.0 0.0 4060 156 pts/12 S+ 23:35 0:00 | \_ ./differ

We have not connected any clients yet, and the program has already forked twice. What is happening in the system? Start with the master process: it spins in an endless loop, checking whether it needs to fork more processes. Since it does this without pausing, it constantly demands CPU time from the OS, because our loop must always be executing. This means that we consume 100% of one core; in the ps output the value is 98.0%. The same can be seen in top:

[root@localhost ]# top -n 1 | head

top - 23:39:22 up 141 days, 21 min, 8 users, load average: 1.03, 0.59, 0.23

Tasks: 195 total, 2 running, 193 sleeping, 0 stopped, 0 zombie

Cpu(s): 0.3%us, 0.2%sy, 0.0%ni, 99.3%id, 0.2%wa, 0.0%hi, 0.0%si, 0.0%st

Mem: 1896936k total, 1876280k used, 20656k free, 151208k buffers

Swap: 4194296k total, 107600k used, 4086696k free, 1003568k cached

PID USER PR NI VIRT RES SHR S %CPU %MEM TIME+ COMMAND

44194 tolik 20 0 4060 504 420 R 98.9 0.0 4:10.54 differ

44255 root 20 0 15028 1256 884 R 3.8 0.1 0:00.03 top

1 root 20 0 19232 548 380 S 0.0 0.0 2:17.17 init

If we attach strace to the parent, we won't see anything, because our process does not call any kernel functions:

[root@localhost ]# strace -p 44194

Process 44194 attached - interrupt to quit

^CProcess 44194 detached

[root@localhost ]#

What are the child processes doing? This is where the fun begins. Judging by the code, after the fork all of them should be blocked in accept, waiting for new connections on the same port, 2222 in our case. Let's check:

[root@localhost ]# strace -p 44195

Process 44195 attached - interrupt to quit

accept(4, ^C

Process 44195 detached

[root@localhost ]# strace -p 44196

Process 44196 attached - interrupt to quit

accept(4, ^C

Process 44196 detached

At the moment they do not demand CPU time from the OS and consume only memory. But here is the question: which of them will accept my connection if I run telnet? Let's check:

[tolik@localhost ]$ telnet localhost 2222

Connected to localhost.

Escape character is '^]'.

client 1 test

hhh

[root@localhost ]# strace -p 44459

Process 44459 attached - interrupt to quit

read(5, ^C

Process 44459 detached

[root@localhost ]# strace -p 44460

Process 44460 attached - interrupt to quit

accept(4, ^C

Process 44460 detached

We see that the process created earlier (with the lower pid) handled the connection first and is now blocked in read. If we start a second telnet, the next process will handle that connection. After we have finished with the socket, we could close it and go back to accept (I do not do this, so as not to complicate the program).

One last question remains: what do we do with the parent process so that it keeps working without consuming so much CPU? We need to yield time to other processes voluntarily, that is, “tell” the OS that we do not need the CPU for a while. The call sleep(1) suits this task: if you uncomment it, strace will show a picture that repeats once per second:

[root@localhost ]# strace -p 44601

…..

rt_sigprocmask(SIG_BLOCK, [CHLD], [], 8) = 0

rt_sigaction(SIGCHLD, NULL, {SIG_DFL, [], 0}, 8) = 0

rt_sigprocmask(SIG_SETMASK, [], NULL, 8) = 0

nanosleep({1, 0}, 0x7fff60a15aa0) = 0

….

rt_sigprocmask(SIG_BLOCK, [CHLD], [], 8) = 0

rt_sigaction(SIGCHLD, NULL, {SIG_DFL, [], 0}, 8) = 0

rt_sigprocmask(SIG_SETMASK, [], NULL, 8) = 0

nanosleep({1, 0}, 0x7fff60a15aa0) = 0

…

etc.

Our process will get the CPU about once per second, or at least request it from the OS.

If you still don't see what this long article is about, look at Apache httpd running in prefork mode:

[root@www /]# ps axuf | grep [h]ttpd

root 12730 0.0 0.5 271560 11916 ? Ss Feb25 3:14 /usr/sbin/httpd

apache 19832 0.0 0.3 271692 7200 ? S Apr17 0:00 \_ /usr/sbin/httpd

apache 19833 0.0 0.3 271692 7212 ? S Apr17 0:00 \_ /usr/sbin/httpd

apache 19834 0.0 0.3 271692 7204 ? S Apr17 0:00 \_ /usr/sbin/httpd

apache 19835 0.0 0.3 271692 7200 ? S Apr17 0:00 \_ /usr/sbin/httpd

Child processes in accept:

[root@www /]# strace -p 19832

Process 19832 attached

accept4(3, ^CProcess 19832 detached

[root@www /]# strace -p 19833

Process 19833 attached

accept4(3, ^CProcess 19833 detached

The master process with a one-second pause:

[root@www /]# strace -p 12730

Process 12730 attached

select(0, NULL, NULL, NULL, {0, 629715}) = 0 (Timeout)

wait4(-1, 0x7fff4c9e3fbc, WNOHANG|WSTOPPED, NULL) = 0

select(0, NULL, NULL, NULL, {1, 0}) = 0 (Timeout)

wait4(-1, 0x7fff4c9e3fbc, WNOHANG|WSTOPPED, NULL) = 0

select(0, NULL, NULL, NULL, {1, 0}) = 0 (Timeout)

wait4(-1, 0x7fff4c9e3fbc, WNOHANG|WSTOPPED, NULL) = 0

When httpd starts, the master process spawns child processes. This is easy to see if you run strace on the master process at launch time.

Run a web server with the following settings:

StartServers 1

MinSpareServers 9

MaxSpareServers 10

ServerLimit 10

MaxClients 10

MaxRequestsPerChild 1

These settings say that each child process will handle only one request and then be killed. The minimum number of processes waiting in accept is 9 and the maximum is 10.

If we run strace on the master process at startup, we see the master call clone until it reaches MinSpareServers.

Tracing

rt_sigaction(SIGSEGV, {0x7f9991933c20, [], SA_RESTORER|SA_RESETHAND, 0x7f99901dd500}, NULL, 8) = 0

rt_sigaction(SIGBUS, {0x7f9991933c20, [], SA_RESTORER|SA_RESETHAND, 0x7f99901dd500}, NULL, 8) = 0

rt_sigaction(SIGABRT, {0x7f9991933c20, [], SA_RESTORER|SA_RESETHAND, 0x7f99901dd500}, NULL, 8) = 0

rt_sigaction(SIGILL, {0x7f9991933c20, [], SA_RESTORER|SA_RESETHAND, 0x7f99901dd500}, NULL, 8) = 0

rt_sigaction(SIGFPE, {0x7f9991933c20, [], SA_RESTORER|SA_RESETHAND, 0x7f99901dd500}, NULL, 8) = 0

rt_sigaction(SIGTERM, {0x7f999193de50, [], SA_RESTORER, 0x7f99901dd500}, NULL, 8) = 0

rt_sigaction(SIGWINCH, {0x7f999193de50, [], SA_RESTORER, 0x7f99901dd500}, NULL, 8) = 0

rt_sigaction(SIGINT, {0x7f999193de50, [], SA_RESTORER, 0x7f99901dd500}, NULL, 8) = 0

rt_sigaction(SIGXCPU, {SIG_DFL, [], SA_RESTORER, 0x7f99901dd500}, NULL, 8) = 0

rt_sigaction(SIGXFSZ, {SIG_IGN, [], SA_RESTORER, 0x7f99901dd500}, NULL, 8) = 0

rt_sigaction(SIGPIPE, {SIG_IGN, [], SA_RESTORER, 0x7f99901dd500}, NULL, 8) = 0

rt_sigaction(SIGHUP, {0x7f999193de80, [HUP USR1], SA_RESTORER, 0x7f99901dd500}, NULL, 8) = 0

rt_sigaction(SIGUSR1, {0x7f999193de80, [HUP USR1], SA_RESTORER, 0x7f99901dd500}, NULL, 8) = 0

clone(child_stack=0, flags=CLONE_CHILD_CLEARTID|CLONE_CHILD_SETTID|SIGCHLD, child_tidptr=0x7f99918eeab0) = 13098

write(2, "[Wed Jan 25 13:24:39 2017] [noti"..., 114) = 114

wait4(-1, 0x7fffae295fdc, WNOHANG|WSTOPPED, NULL) = 0

select(0, NULL, NULL, NULL, {1, 0}) = 0 (Timeout)

clone(child_stack=0, flags=CLONE_CHILD_CLEARTID|CLONE_CHILD_SETTID|SIGCHLD, child_tidptr=0x7f99918eeab0) = 13099

wait4(-1, 0x7fffae295fdc, WNOHANG|WSTOPPED, NULL) = 0

select(0, NULL, NULL, NULL, {1, 0}) = 0 (Timeout)

clone(child_stack=0, flags=CLONE_CHILD_CLEARTID|CLONE_CHILD_SETTID|SIGCHLD, child_tidptr=0x7f99918eeab0) = 13100

clone(child_stack=0, flags=CLONE_CHILD_CLEARTID|CLONE_CHILD_SETTID|SIGCHLD, child_tidptr=0x7f99918eeab0) = 13101

wait4(-1, 0x7fffae295fdc, WNOHANG|WSTOPPED, NULL) = 0

select(0, NULL, NULL, NULL, {1, 0}) = 0 (Timeout)

clone(child_stack=0, flags=CLONE_CHILD_CLEARTID|CLONE_CHILD_SETTID|SIGCHLD, child_tidptr=0x7f99918eeab0) = 13102

clone(child_stack=0, flags=CLONE_CHILD_CLEARTID|CLONE_CHILD_SETTID|SIGCHLD, child_tidptr=0x7f99918eeab0) = 13103

clone(child_stack=0, flags=CLONE_CHILD_CLEARTID|CLONE_CHILD_SETTID|SIGCHLD, child_tidptr=0x7f99918eeab0) = 13104

clone(child_stack=0, flags=CLONE_CHILD_CLEARTID|CLONE_CHILD_SETTID|SIGCHLD, child_tidptr=0x7f99918eeab0) = 13105

wait4(-1, 0x7fffae295fdc, WNOHANG|WSTOPPED, NULL) = 0

select(0, NULL, NULL, NULL, {1, 0}) = 0 (Timeout)

clone(child_stack=0, flags=CLONE_CHILD_CLEARTID|CLONE_CHILD_SETTID|SIGCHLD, child_tidptr=0x7f99918eeab0) = 13106

clone(child_stack=0, flags=CLONE_CHILD_CLEARTID|CLONE_CHILD_SETTID|SIGCHLD, child_tidptr=0x7f99918eeab0) = 13107

wait4(-1, 0x7fffae295fdc, WNOHANG|WSTOPPED, NULL) = 0

select(0, NULL, NULL, NULL, {1, 0}) = 0 (Timeout)

To watch how Apache starts, simply run ps axuf | grep [h]ttp every second immediately after the start.

Starting Apache:

[root@www /]# date; ps axuf | grep [h]ttp

Wed Jan 25 14:12:10 EST 2017

root 13342 2.5 0.4 271084 9384? Ss 14:12 0:00 /usr/sbin/httpd

apache 13344 0.0 0.2 271084 5232? S 14:12 0:00 _ /usr/sbin/httpd

[root@www /]# date; ps axuf | grep [h]ttp

Wed Jan 25 14:12:11 EST 2017

root 13342 1.6 0.4 271084 9384? Ss 14:12 0:00 /usr/sbin/httpd

apache 13344 0.0 0.2 271084 5232? S 14:12 0:00 _ /usr/sbin/httpd

apache 13348 0.0 0.2 271084 5232? S 14:12 0:00 _ /usr/sbin/httpd

[root@www /]# date; ps axuf | grep [h]ttp

Wed Jan 25 14:12:11 EST 2017

root 13342 2.0 0.4 271084 9384? Ss 14:12 0:00 /usr/sbin/httpd

apache 13344 0.0 0.2 271084 5232? S 14:12 0:00 _ /usr/sbin/httpd

apache 13348 0.0 0.2 271084 5232? S 14:12 0:00 _ /usr/sbin/httpd

apache 13352 0.0 0.2 271084 5232? S 14:12 0:00 _ /usr/sbin/httpd

apache 13353 0.0 0.2 271084 5232? S 14:12 0:00 _ /usr/sbin/httpd

[root@www /]# date; ps axuf | grep [h]ttp

Wed Jan 25 14:12:12 EST 2017

root 13342 1.7 0.4 271084 9384? Ss 14:12 0:00 /usr/sbin/httpd

apache 13344 0.0 0.2 271084 5232? S 14:12 0:00 _ /usr/sbin/httpd

apache 13348 0.0 0.2 271084 5232? S 14:12 0:00 _ /usr/sbin/httpd

apache 13352 0.0 0.2 271084 5232? S 14:12 0:00 _ /usr/sbin/httpd

apache 13353 0.0 0.2 271084 5232? S 14:12 0:00 _ /usr/sbin/httpd

apache 13357 0.0 0.2 271084 5232? S 14:12 0:00 _ /usr/sbin/httpd

apache 13358 0.0 0.2 271084 5232? S 14:12 0:00 _ /usr/sbin/httpd

apache 13359 0.0 0.2 271084 5232? S 14:12 0:00 _ /usr/sbin/httpd

apache 13360 0.0 0.2 271084 5232? S 14:12 0:00 _ /usr/sbin/httpd

[root@www /]# date; ps axuf | grep [h]ttp

Wed Jan 25 14:12:13 EST 2017

root 13342 1.4 0.4 271084 9384? Ss 14:12 0:00 /usr/sbin/httpd

apache 13344 0.0 0.2 271084 5232? S 14:12 0:00 _ /usr/sbin/httpd

apache 13348 0.0 0.2 271084 5232? S 14:12 0:00 _ /usr/sbin/httpd

apache 13352 0.0 0.2 271084 5232? S 14:12 0:00 _ /usr/sbin/httpd

apache 13353 0.0 0.2 271084 5232? S 14:12 0:00 _ /usr/sbin/httpd

apache 13357 0.0 0.2 271084 5232? S 14:12 0:00 _ /usr/sbin/httpd

apache 13358 0.0 0.2 271084 5232? S 14:12 0:00 _ /usr/sbin/httpd

apache 13359 0.0 0.2 271084 5232? S 14:12 0:00 _ /usr/sbin/httpd

apache 13360 0.0 0.2 271084 5232? S 14:12 0:00 _ /usr/sbin/httpd

apache 13364 0.0 0.2 271084 5232? S 14:12 0:00 _ /usr/sbin/httpd

apache 13365 0.0 0.2 271084 5232? S 14:12 0:00 _ /usr/sbin/httpd

[root@www /]#

So, we have several child processes that are ready to accept our HTTP request. Let's try to send one:

[root@www /]# wget -O /dev/null http://localhost

--2017-01-25 14:04:00-- http://localhost/

Resolving localhost... ::1, 127.0.0.1

Connecting to localhost|::1|:80... failed: Connection refused.

Connecting to localhost|127.0.0.1|:80... connected.

HTTP request sent, awaiting response... 403 Forbidden

2017-01-25 14:04:00 ERROR 403: Forbidden.

Apache answered with 403. Look at the processes:

root 13342 0.0 0.4 271084 9384 ? Ss 14:12 0:00 /usr/sbin/httpd

apache 13348 0.0 0.2 271084 5232 ? S 14:12 0:00 \_ /usr/sbin/httpd

apache 13352 0.0 0.2 271084 5232 ? S 14:12 0:00 \_ /usr/sbin/httpd

apache 13353 0.0 0.2 271084 5232 ? S 14:12 0:00 \_ /usr/sbin/httpd

apache 13357 0.0 0.2 271084 5232 ? S 14:12 0:00 \_ /usr/sbin/httpd

apache 13358 0.0 0.2 271084 5232 ? S 14:12 0:00 \_ /usr/sbin/httpd

apache 13359 0.0 0.2 271084 5232 ? S 14:12 0:00 \_ /usr/sbin/httpd

apache 13360 0.0 0.2 271084 5232 ? S 14:12 0:00 \_ /usr/sbin/httpd

apache 13364 0.0 0.2 271084 5232 ? S 14:12 0:00 \_ /usr/sbin/httpd

apache 13365 0.0 0.2 271084 5232 ? S 14:12 0:00 \_ /usr/sbin/httpd

As you can see, the process with the lowest pid handled the request and finished its work:

apache 13344 0.0 0.2 271084 5232 ? S 14:12 0:00 \_ /usr/sbin/httpd

We are left with 9 child processes, which fits our MinSpareServers limit.

Let's send the request again:

[root@www /]# wget -O /dev/null http://localhost

--2017-01-25 14:15:47-- http://localhost/

Resolving localhost... ::1, 127.0.0.1

Connecting to localhost|::1|:80... failed: Connection refused.

Connecting to localhost|127.0.0.1|:80... connected.

HTTP request sent, awaiting response... 403 Forbidden

2017-01-25 14:15:47 ERROR 403: Forbidden.

[root@www /]# ps axuf | grep [h]ttp

root 13342 0.0 0.4 271084 9384 ? Ss 14:12 0:00 /usr/sbin/httpd

apache 13352 0.0 0.2 271084 5232 ? S 14:12 0:00 \_ /usr/sbin/httpd

apache 13353 0.0 0.2 271084 5232 ? S 14:12 0:00 \_ /usr/sbin/httpd

apache 13357 0.0 0.2 271084 5232 ? S 14:12 0:00 \_ /usr/sbin/httpd

apache 13358 0.0 0.2 271084 5232 ? S 14:12 0:00 \_ /usr/sbin/httpd

apache 13359 0.0 0.2 271084 5232 ? S 14:12 0:00 \_ /usr/sbin/httpd

apache 13360 0.0 0.2 271084 5232 ? S 14:12 0:00 \_ /usr/sbin/httpd

apache 13364 0.0 0.2 271084 5232 ? S 14:12 0:00 \_ /usr/sbin/httpd

apache 13365 0.0 0.2 271084 5232 ? S 14:12 0:00 \_ /usr/sbin/httpd

apache 13373 0.0 0.2 271084 5232 ? S 14:15 0:00 \_ /usr/sbin/httpd

This time our request was handled by the process

apache 13348 0.0 0.2 271084 5232 ? S 14:12 0:00 \_ /usr/sbin/httpd

because it now had the lowest pid.

But that left 8 free child processes in accept, one short of MinSpareServers, so the master process created a new one:

apache 13373 0.0 0.2 271084 5232 ? S 14:15 0:00 \_ /usr/sbin/httpd

Let's tell the OS not to give CPU time to the Apache master process:

[root@www /]# kill -SIGSTOP 13342

The process status has changed: it is no longer running.

Check whether our web server is still working:

[root@www /]# wget -O /dev/null http://localhost

--2017-01-25 14:20:12-- http://localhost/

Resolving localhost... ::1, 127.0.0.1

Connecting to localhost|::1|:80... failed: Connection refused.

Connecting to localhost|127.0.0.1|:80... connected.

HTTP request sent, awaiting response... 403 Forbidden

2017-01-25 14:20:12 ERROR 403: Forbidden.

Yes, the web server is still responding.

Look at what happened to the processes:

root 13342 0.0 0.4 271084 9384 ? Ts 14:12 0:00 /usr/sbin/httpd

apache 13352 0.0 0.0 0 0 ? Z 14:12 0:00 \_ [httpd]

apache 13353 0.0 0.2 271084 5232 ? S 14:12 0:00 \_ /usr/sbin/httpd

apache 13357 0.0 0.2 271084 5232 ? S 14:12 0:00 \_ /usr/sbin/httpd

apache 13358 0.0 0.2 271084 5232 ? S 14:12 0:00 \_ /usr/sbin/httpd

apache 13359 0.0 0.2 271084 5232 ? S 14:12 0:00 \_ /usr/sbin/httpd

apache 13360 0.0 0.2 271084 5232 ? S 14:12 0:00 \_ /usr/sbin/httpd

apache 13364 0.0 0.2 271084 5232 ? S 14:12 0:00 \_ /usr/sbin/httpd

apache 13365 0.0 0.2 271084 5232 ? S 14:12 0:00 \_ /usr/sbin/httpd

apache 13373 0.0 0.2 271084 5232 ? S 14:15 0:00 \_ /usr/sbin/httpd

Our request was handled by the next child process, which did its work and exited. But it left an exit code that must be collected by the master process. Since the master process is stopped, the exit code stays in the kernel's process table, and although the process itself no longer exists, its entry in the table is marked as a zombie.

apache 13352 0.0 0.0 0 0 ? Z 14:12 0:00 \_ [httpd]

Naturally, we now have 8 child processes, since nobody spawns a ninth: the master is stopped.

As an experiment, let's send another HTTP request:

[root@www /]# wget -O /dev/null http://localhost

--2017-01-25 14:25:03-- http://localhost/

Resolving localhost... ::1, 127.0.0.1

Connecting to localhost|::1|:80... failed: Connection refused.

Connecting to localhost|127.0.0.1|:80... connected.

HTTP request sent, awaiting response... 403 Forbidden

2017-01-25 14:25:03 ERROR 403: Forbidden.

[root@www /]# ps axuf | grep [h]ttp

root 13342 0.0 0.4 271084 9384 ? Ts 14:12 0:00 /usr/sbin/httpd

apache 13352 0.0 0.0 0 0 ? Z 14:12 0:00 \_ [httpd]

apache 13353 0.0 0.0 0 0 ? Z 14:12 0:00 \_ [httpd]

apache 13357 0.0 0.2 271084 5232 ? S 14:12 0:00 \_ /usr/sbin/httpd

apache 13358 0.0 0.2 271084 5232 ? S 14:12 0:00 \_ /usr/sbin/httpd

apache 13359 0.0 0.2 271084 5232 ? S 14:12 0:00 \_ /usr/sbin/httpd

apache 13360 0.0 0.2 271084 5232 ? S 14:12 0:00 \_ /usr/sbin/httpd

apache 13364 0.0 0.2 271084 5232 ? S 14:12 0:00 \_ /usr/sbin/httpd

apache 13365 0.0 0.2 271084 5232 ? S 14:12 0:00 \_ /usr/sbin/httpd

apache 13373 0.0 0.2 271084 5232 ? S 14:15 0:00 \_ /usr/sbin/httpd

As expected, the situation repeats: one more child has become a zombie.

Now let's tell the OS to let the master process run again:

[root@www /]# kill -SIGCONT 13342

[root@www /]# ps axuf | grep [h]ttp

root 13342 0.0 0.4 271084 9384 ? Ss 14:12 0:00 /usr/sbin/httpd

apache 13357 0.0 0.2 271084 5232 ? S 14:12 0:00 \_ /usr/sbin/httpd

apache 13358 0.0 0.2 271084 5232 ? S 14:12 0:00 \_ /usr/sbin/httpd

apache 13359 0.0 0.2 271084 5232 ? S 14:12 0:00 \_ /usr/sbin/httpd

apache 13360 0.0 0.2 271084 5232 ? S 14:12 0:00 \_ /usr/sbin/httpd

apache 13364 0.0 0.2 271084 5232 ? S 14:12 0:00 \_ /usr/sbin/httpd

apache 13365 0.0 0.2 271084 5232 ? S 14:12 0:00 \_ /usr/sbin/httpd

apache 13373 0.0 0.2 271084 5232 ? S 14:15 0:00 \_ /usr/sbin/httpd

apache 13388 0.0 0.2 271084 5232 ? S 14:26 0:00 \_ /usr/sbin/httpd

apache 13389 0.0 0.2 271084 5232 ? S 14:26 0:00 \_ /usr/sbin/httpd

apache 13390 0.0 0.2 271084 5232 ? S 14:26 0:00 \_ /usr/sbin/httpd

The master process immediately read the exit codes of its children, so their entries disappeared from the process table, and it forked replacements for the missing processes. We now have 10 idle processes waiting in accept, which fits within the limits set in our config.
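What "reading the exit codes" means in terms of system calls can be sketched like this. It is a simplified illustration and not Apache's actual code: the helper names spawn_short_lived and reap_all_children are hypothetical, and a real master would normally reap children as they exit instead of blocking until all of them are gone.

```c
#include <assert.h>
#include <errno.h>
#include <sys/types.h>
#include <sys/wait.h>
#include <unistd.h>

/* Start n children that exit right away; returns how many were started. */
int spawn_short_lived(int n)
{
    int started = 0;

    assert(n >= 0);
    for (int i = 0; i < n; i++) {
        pid_t pid = fork();
        if (pid < 0) break;          /* fork failed, stop here */
        if (pid == 0) _exit(0);      /* child: exit immediately */
        started++;
    }
    return started;
}

/* Collect the exit codes of all children, blocking until each one has
 * exited. Every successful waitpid removes one entry from the process
 * table. Returns the number of children reaped. */
int reap_all_children(void)
{
    int reaped = 0, status;

    for (;;) {
        pid_t pid = waitpid(-1, &status, 0);
        if (pid > 0) { reaped++; continue; }
        if (pid < 0 && errno == EINTR) continue;  /* interrupted, retry */
        break;                                    /* ECHILD: nobody left */
    }
    return reaped;
}
```

Until reap_all_children() is called, every exited child stays in the process table as a zombie, exactly as it did while the master was stopped.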

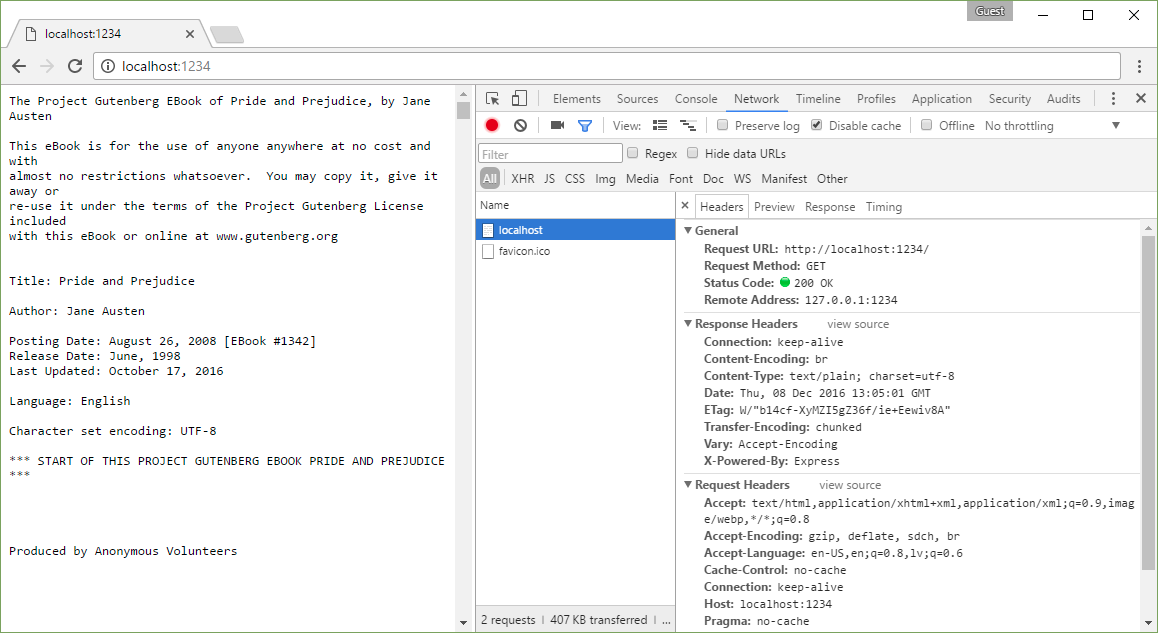

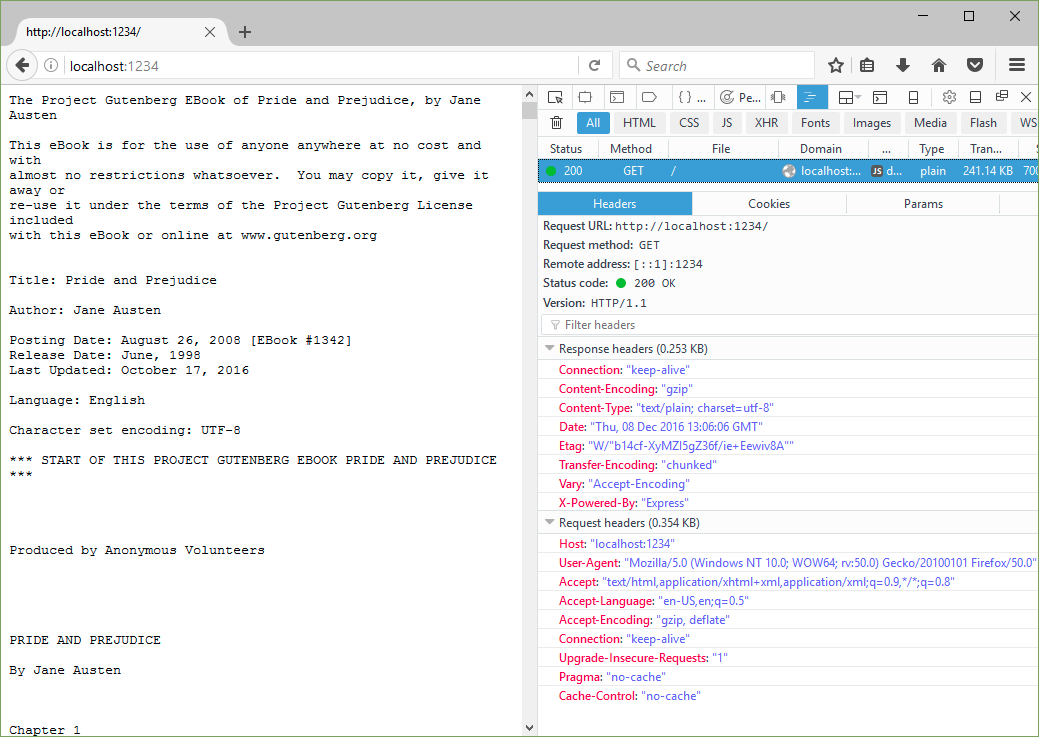

How does nginx work? As you know, the accept system call blocks our program until a new connection arrives. So it seems that a single process cannot wait for a new connection and serve an already open one at the same time. Or can it?

Take a look at the code:

Code with select

#define PORT 2222

#include <stdio.h>

#include <stdlib.h>

#include <string.h>

#include <unistd.h>

#include <errno.h>

#include <arpa/inet.h>

#include <sys/types.h>

#include <sys/socket.h>

#include <netinet/in.h>

#include <sys/time.h>

// Report the failed call together with errno and stop the program

static void error(const char *msg) { perror(msg); exit(EXIT_FAILURE); }

int main(int argc , char *argv[])

{

    int master_socket , new_socket , client_socket[30] , max_clients = 30 , activity, i , valread , sd;

    int max_sd;

    socklen_t addrlen;

    FILE * resultfile;

    struct sockaddr_in address;

    char buffer[1025]; // up to 1024 bytes of data plus the terminating '\0'

    fd_set readfds;

    resultfile = fopen("/tmp/nginx_vs_apache.log","a");

    if (resultfile == NULL) error("fopen");

    // Fill our array of sockets with zeros (0 marks a free slot)

    for (i = 0; i < max_clients; i++) client_socket[i] = 0;

    if( (master_socket = socket(AF_INET , SOCK_STREAM , 0)) < 0) error("socket failed");

    memset(&address, 0, sizeof(address));

    address.sin_family = AF_INET;

    address.sin_addr.s_addr = INADDR_ANY;

    address.sin_port = htons( PORT );

    if (bind(master_socket, (struct sockaddr *)&address, sizeof(address))<0) error("bind failed");

    if (listen(master_socket, 3) < 0) error("listen");

    while(1) // process requests in an endless loop

    {

        // select modifies the set, so rebuild it on every iteration

        FD_ZERO(&readfds);

        FD_SET(master_socket, &readfds);

        max_sd = master_socket;

        for ( i = 0 ; i < max_clients ; i++)

        {

            sd = client_socket[i];

            if(sd > 0) FD_SET( sd , &readfds);

            if(sd > max_sd) max_sd = sd;

        }

        // Wait for events on any of the sockets we are interested in

        activity = select( max_sd + 1 , &readfds , NULL , NULL , NULL);

        if (activity < 0)

        {

            if (errno == EINTR) continue; // interrupted by a signal, just retry

            error("select");

        }

        // Process a new connection

        if (FD_ISSET(master_socket, &readfds))

        {

            addrlen = sizeof(address);

            if ((new_socket = accept(master_socket, (struct sockaddr *)&address, &addrlen))<0) error("accept");

            for (i = 0; i < max_clients; i++)

                if( client_socket[i] == 0 ) { client_socket[i] = new_socket; break; }

            if (i == max_clients) close(new_socket); // no free slot left

        }

        // Check every client socket, since we do not know which one woke us up

        for (i = 0; i < max_clients; i++)

        {

            sd = client_socket[i];

            if (sd > 0 && FD_ISSET( sd , &readfds))

            {

                // 0 means the peer closed the connection, a negative value is an error

                if ((valread = read( sd , buffer, sizeof(buffer) - 1)) <= 0) { close( sd ); client_socket[i] = 0; }

                else

                {

                    buffer[valread] = '\0';

                    fprintf(resultfile, "%s", buffer);

                    fflush (resultfile);

                }

            }

        }

    }

    return 0;

}

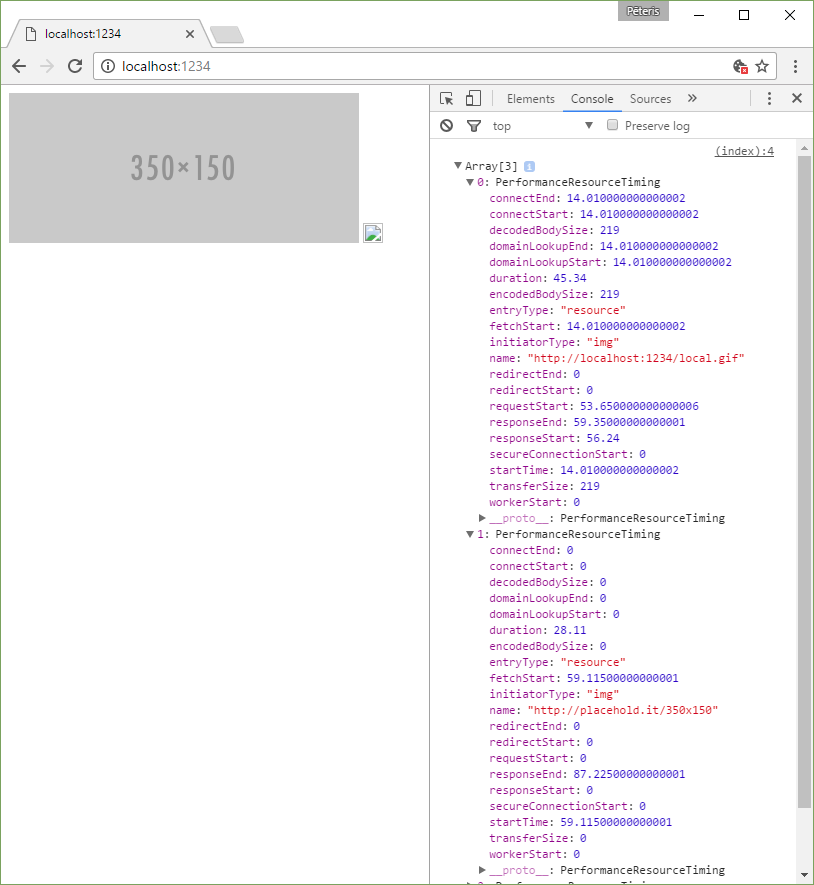

This code looks a bit more complicated than the previous one, but it is quite easy to explain. Suppose the process has to handle at most 30 connections. We create an array filled with zeros. As soon as a new connection arrives, we accept it and store its socket descriptor in this array. By walking through the array, we can read from all of our sockets one after another. But how do we learn about a new connection without blocking in accept? Linux offers at least three functions for that: select, poll and epoll. FreeBSD has an analogue of epoll called kqueue (kernel queue).

What do these calls do? select is the oldest of them and is still in use. Like accept, it hands the CPU over to the kernel and asks to get it back only under certain conditions. The difference is that the kernel returns control to us as soon as there is activity on any of the sockets we specified. When the program starts, only one socket is open, so we pass just that one to select. Once we connect to our daemon with telnet, we have to pass two sockets to select: the master socket on port 2222 and the one that has connected to us. To make it clearer, here is a demonstration:

[tolik@101host nginx_vs_apache]$ ./differ &

[1] 44832

[tolik@101host nginx_vs_apache]$ ps axuf | grep [.]/differ

tolik 44832 0.0 0.0 4060 448 pts/0 S 22:47 0:00 \_ ./differ

[root@localhost ]# strace -p 44832

Process 44832 attached - interrupt to quit

select(5, [4], NULL, NULL, NULL) = 1 (in [4])

At this point, from another console we connect with telnet to port 2222 of our daemon and look at the trace:

accept(4, {sa_family=AF_INET, sin_port=htons(41130), sin_addr=inet_addr("127.0.0.1")}, [16]) = 5

select(6, [4 5], NULL, NULL, NULL^C

Process 44832 detached

[root@localhost ]# ls -lh /proc/44832/fd

total 0

lrwx------ 1 tolik tolik 64 Apr 19 00:26 0 -> /dev/pts/12

lrwx------ 1 tolik tolik 64 Apr 19 00:26 1 -> /dev/pts/12

lrwx------ 1 tolik tolik 64 Apr 19 00:21 2 -> /dev/pts/12

l-wx------ 1 tolik tolik 64 Apr 19 00:26 3 -> /tmp/nginx_vs_apache.log

lrwx------ 1 tolik tolik 64 Apr 19 00:26 4 -> socket:[42651147]

lrwx------ 1 tolik tolik 64 Apr 19 00:26 5 -> socket:[42651320]

[root@localhost ]# netstat -apeen | grep 42651147

tcp 0 0 0.0.0.0:2222 0.0.0.0:* LISTEN 500 42651147 44832/./differ

[root@localhost ]# netstat -apeen | grep 42651320

tcp 0 0 127.0.0.1:2222 127.0.0.1:41130 ESTABLISHED 500 42651320 44832/./differ

At first we passed only socket 4 to select (see the square brackets). From /proc we learned that file descriptor 4 is a socket with the number 42651147. From netstat we found out that this is our socket listening on port 2222. As soon as we connected to it, the OS performed the TCP handshake with our telnet client and established a new connection, about which it notified the application through select. Our program got CPU time and started going through its still empty array of connections. Seeing that there is a new connection, we call accept, knowing that it will not block the program, because the connection is already there. In effect, we use the same accept, but in a way that never blocks.
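The program in this article relies on select to guarantee that accept has something to return. A stricter variant, which the article's code does not use, is to switch the listening socket into real non-blocking mode with the O_NONBLOCK flag. Then accept returns immediately with EAGAIN when nothing is pending. Here is a small sketch of that idea. The helper names listen_local, set_nonblocking and try_accept are mine, and the return code -2 is just a convention chosen for this example.

```c
#include <assert.h>
#include <errno.h>
#include <fcntl.h>
#include <string.h>
#include <arpa/inet.h>
#include <netinet/in.h>
#include <sys/socket.h>
#include <unistd.h>

/* Create a TCP socket listening on 127.0.0.1:port (port 0 = any free port).
 * Returns the descriptor or -1 on error. */
int listen_local(unsigned short port)
{
    struct sockaddr_in addr;
    int fd = socket(AF_INET, SOCK_STREAM, 0);

    if (fd < 0) return -1;
    memset(&addr, 0, sizeof(addr));
    addr.sin_family = AF_INET;
    addr.sin_addr.s_addr = htonl(INADDR_LOOPBACK);
    addr.sin_port = htons(port);
    if (bind(fd, (struct sockaddr *)&addr, sizeof(addr)) < 0 ||
        listen(fd, 16) < 0) {
        close(fd);
        return -1;
    }
    return fd;
}

/* Switch a descriptor to non-blocking mode. Returns 0 on success. */
int set_nonblocking(int fd)
{
    int flags;

    assert(fd >= 0);
    flags = fcntl(fd, F_GETFL, 0);
    if (flags < 0) return -1;
    return fcntl(fd, F_SETFL, flags | O_NONBLOCK) < 0 ? -1 : 0;
}

/* Try to accept one connection without ever blocking.
 * Returns the new descriptor, -2 if no connection is pending, -1 on error. */
int try_accept(int listen_fd)
{
    int fd = accept(listen_fd, NULL, NULL);

    if (fd >= 0) return fd;
    return (errno == EAGAIN || errno == EWOULDBLOCK) ? -2 : -1;
}
```

With a socket prepared this way, a stray wake-up costs nothing: try_accept simply reports that there is no connection yet, and the loop goes back to waiting.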

After accepting the connection, we handed control back to the Linux kernel, but told it that we now want notifications for two sockets, numbers 4 and 5, which is clearly visible in the strace output ([4 5]). This is how nginx works: it can handle a large number of sockets in a single process. On existing sockets we can perform read/write operations, and on the listening one we can call accept. select is a very old system call with a number of limitations, for example on the maximum number of connections (file descriptors). It was superseded by the more advanced poll, which does not have this limit and works faster. Later came epoll and kqueue (in FreeBSD). These more advanced functions let you work with connections more efficiently.

Which of these functions does nginx support? Nginx can work with all of them.

See the nginx documentation for details. In this article I will not describe the differences between these functions, because the text is already long enough.
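To give a feeling for the difference, here is a minimal epoll sketch (Linux only). It is an illustration under my own assumptions, not code taken from nginx, and the function names epoll_watch_readable and epoll_next_ready are hypothetical. Note that the descriptor is registered with the kernel once, instead of rebuilding the whole set before every call as we had to do with select.

```c
#include <assert.h>
#include <string.h>
#include <sys/epoll.h>
#include <unistd.h>

/* Create an epoll instance and register fd for "readable" events.
 * Returns the epoll descriptor or -1 on error. */
int epoll_watch_readable(int fd)
{
    struct epoll_event ev;
    int epfd;

    assert(fd >= 0);
    epfd = epoll_create1(0);
    if (epfd < 0) return -1;

    memset(&ev, 0, sizeof(ev));
    ev.events = EPOLLIN;     /* wake us up when there is data to read */
    ev.data.fd = fd;         /* returned to us together with the event */
    if (epoll_ctl(epfd, EPOLL_CTL_ADD, fd, &ev) < 0) {
        close(epfd);
        return -1;
    }
    return epfd;
}

/* Wait up to timeout_ms milliseconds for one event.
 * Returns the ready descriptor, or -1 on timeout or error. */
int epoll_next_ready(int epfd, int timeout_ms)
{
    struct epoll_event ev;
    int n = epoll_wait(epfd, &ev, 1, timeout_ms);

    return n == 1 ? ev.data.fd : -1;
}
```

More descriptors can be added later with further epoll_ctl calls, and epoll_wait hands back only the descriptors that are actually ready, so there is no need to walk through the whole array after every wake-up.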

Nginx uses fork to create processes and to load all the CPU cores of the server. But each nginx worker process handles many connections, in the same way as in the select example, only using a more modern function for it (on Linux the default is epoll). Take a look:

[root@localhost ]# ps axuf| grep [n]ginx

root 232753 0.0 0.0 96592 556 ? Ss Feb25 0:00 nginx: master process /usr/sbin/nginx -c /etc/nginx/nginx.conf

nginx 232754 0.0 0.0 97428 1400 ? S Feb25 5:20 \_ nginx: worker process

nginx 232755 0.0 0.0 97460 1364 ? S Feb25 5:02 \_ nginx: worker process

[root@localhost ]# strace -p 232754

Process 232754 attached - interrupt to quit

epoll_wait(12, ^C

Process 232754 detached

[root@localhost ]# strace -p 232755

Process 232755 attached - interrupt to quit

epoll_wait(14, {}, 512, 500) = 0

epoll_wait(14, ^C

Process 232755 detached

And what does the nginx master process do?

[root@localhost ]# strace -p 232753

Process 232753 attached - interrupt to quit

rt_sigsuspend([]^C

Process 232753 detached

It does not accept incoming connections; it only waits for a signal from the OS. On a signal, nginx can do many useful things, for example reopen its file descriptors, which is handy for log rotation, or reread its configuration file.

Nginx processes communicate with each other over Unix sockets:
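The rt_sigsuspend call from the trace can be tried out in a few lines. This is only a sketch of the general technique and not the nginx source: the names prepare_signal and wait_for_signal are invented for the example, and SIGHUP, the signal nginx uses to reload its configuration, is just one signal you could pass in.

```c
#define _POSIX_C_SOURCE 200809L
#include <assert.h>
#include <signal.h>
#include <string.h>

/* Number of the last signal seen by the handler */
static volatile sig_atomic_t last_signal = 0;

static void remember_signal(int signo) { last_signal = signo; }

/* Install a handler for signo and block the signal, so that it can be
 * delivered only while we sit in sigsuspend(). Returns 0 on success. */
int prepare_signal(int signo)
{
    struct sigaction sa;
    sigset_t block;

    assert(signo > 0);
    memset(&sa, 0, sizeof(sa));
    sa.sa_handler = remember_signal;
    sigemptyset(&sa.sa_mask);
    if (sigaction(signo, &sa, NULL) < 0) return -1;

    sigemptyset(&block);
    sigaddset(&block, signo);
    return sigprocmask(SIG_BLOCK, &block, NULL);
}

/* Sleep without using any CPU until a signal arrives.
 * Returns the number of the signal that was handled. */
int wait_for_signal(void)
{
    sigset_t none;

    sigemptyset(&none);
    last_signal = 0;
    sigsuspend(&none);   /* atomically unblock all signals and sleep */
    return last_signal;
}
```

The empty mask passed to sigsuspend is the same [] that strace printed for the master process. After wait_for_signal() returns, a master would look at the signal number and decide what to do, for example reread its config.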

[root@localhost ]# ls -lh /proc/232754/fd

total 0

lrwx------ 1 nginx nginx 64 Apr 8 13:20 0 -> /dev/null

lrwx------ 1 nginx nginx 64 Apr 8 13:20 1 -> /dev/null

lrwx------ 1 nginx nginx 64 Apr 8 13:20 10 -> socket:[25069547]

lrwx------ 1 nginx nginx 64 Apr 8 13:20 11 -> socket:[25069551]

lrwx------ 1 nginx nginx 64 Apr 8 13:20 12 -> anon_inode:[eventpoll]

lrwx------ 1 nginx nginx 64 Apr 8 13:20 13 -> anon_inode:[eventfd]

l-wx------ 1 nginx nginx 64 Apr 8 13:20 2 -> /var/log/nginx/error.log

lrwx------ 1 nginx nginx 64 Apr 8 13:20 3 -> socket:[25069552]

l-wx------ 1 nginx nginx 64 Apr 8 13:20 5 -> /var/log/nginx/error.log

l-wx------ 1 nginx nginx 64 Apr 8 13:20 6 -> /var/log/nginx/access.log

lrwx------ 1 nginx nginx 64 Apr 8 13:20 9 -> socket:[25069546]

[root@localhost ]# netstat -apeen | grep 25069547

tcp 0 0 172.16.0.1:80 0.0.0.0:* LISTEN 0 25069547 232753/nginx

[root@localhost ]# netstat -apeen | grep 25069551

unix 3 [ ] STREAM CONNECTED 25069551 232753/nginx

Conclusion

Before choosing tools, it is important to understand exactly how they work. In some cases it is better to use only Apache httpd without nginx, and in others the other way around. But most often the two are used together, because in Apache the parallel processing of sockets is done by the OS (separate processes), while in nginx it is done by nginx itself.

P.S.

If the programs do not start after compilation, make sure there are no connections left on port 2222. I did not handle closing the sockets properly in the programs, so sockets left over from old daemons can stay open in various states for a while. If a program does not start, just wait until all of those sockets are closed by timeout.
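If you do not want to wait, a common way around it is to set the SO_REUSEADDR option on the listening socket before bind. On Linux this allows binding to a port while old connections on it are still in the TIME_WAIT state. The programs in this article do not do this; the sketch below only shows what such a change could look like, and the names listen_reusable and bound_port are hypothetical.

```c
#include <assert.h>
#include <string.h>
#include <arpa/inet.h>
#include <netinet/in.h>
#include <sys/socket.h>
#include <unistd.h>

/* Create a TCP socket listening on the given port (0 = any free port)
 * with SO_REUSEADDR set. Returns the descriptor or -1 on error. */
int listen_reusable(unsigned short port)
{
    struct sockaddr_in addr;
    int opt = 1;
    int fd = socket(AF_INET, SOCK_STREAM, 0);

    if (fd < 0) return -1;
    /* Must be set before bind() to have any effect */
    if (setsockopt(fd, SOL_SOCKET, SO_REUSEADDR, &opt, sizeof(opt)) < 0) {
        close(fd);
        return -1;
    }
    memset(&addr, 0, sizeof(addr));
    addr.sin_family = AF_INET;
    addr.sin_addr.s_addr = htonl(INADDR_ANY);
    addr.sin_port = htons(port);
    if (bind(fd, (struct sockaddr *)&addr, sizeof(addr)) < 0 ||
        listen(fd, 3) < 0) {
        close(fd);
        return -1;
    }
    return fd;
}

/* Port number the socket is bound to, or 0 on error. */
unsigned short bound_port(int fd)
{
    struct sockaddr_in addr;
    socklen_t len = sizeof(addr);

    assert(fd >= 0);
    if (getsockname(fd, (struct sockaddr *)&addr, &len) < 0) return 0;
    return ntohs(addr.sin_port);
}
```

In the daemon from this article that would mean calling listen_reusable(2222) instead of the separate socket, bind and listen calls.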

This text is a translation of the article “Разница между nginx и apache с примерами” published by @mechanicusilius on habrahabr.ru.
