Hello,

We are pleased to announce the release of our anti-spam plugin for Shopify. "Spam Protection. No captcha" by CleanTalk protects registrations and orders from spam. The app is available for installation from the Shopify app catalog: https://apps.shopify.com/cleantalk

CleanTalk provides spam protection that is invisible to visitors. It does not use captcha or other methods that make visitors prove they are real people.

What anti-spam features are available to you after installing CleanTalk Anti-Spam?

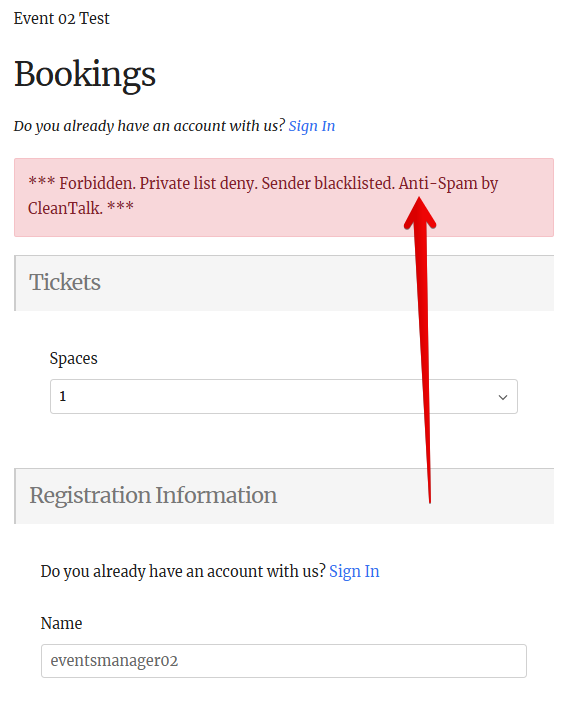

Spam Attacks log – all requests (allowed and denied) are saved in the log. You can view the details of each request, including the date/time, IP/email, and all other necessary information: the block reason, the body of the message, and additionally caught data.

Personal lists:

Stop Words – all requests that contain stop words will be blocked. This helps block profanity in texts and spam that uses specific words.

IP, IP Network, Email, Country – personal lists support records of these types. You can add records to your personal black or white lists.

Real-Time Email Address Existence Validation and Filtering of Disposable & Temporary Emails – helps you avoid not only spam but also fake email addresses.

Instructions for installing the Anti-Spam app for Shopify are available here: https://cleantalk.org/help/install-shopify

If you have any questions, add a comment and we will be happy to help you.