DDoS attacks on a site vary in intensity: what matters is the number of hosts involved in the attack, the number of network packets, and the amount of data transmitted. In the most severe cases, an attack can be repelled only with specialized equipment and services.

If the volume of the attack is less than the bandwidth of the network equipment and the computing power of the server (or server pool) serving the site, you can try to block the attack without resorting to third-party services, namely by enabling a software filter for traffic coming to the site. This filter screens out the traffic of bots participating in the attack while letting through the legitimate traffic of "live" site visitors.

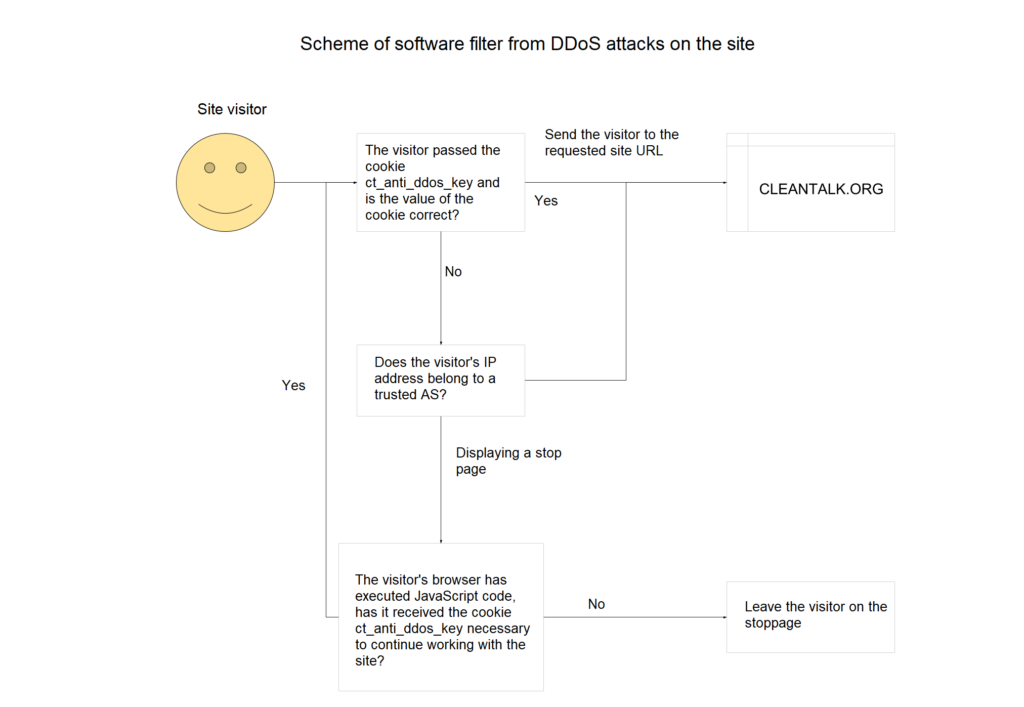

Diagram of the software filter against DDoS attacks on the site

The filter relies on the fact that bots participating in DDoS attacks are not able to execute JavaScript code, so they never get past the filter's stop page. This significantly reduces the load on the frontend, the backend, and the site database, because processing each GET/POST request of the attack takes no more than 20 lines of backend code and returns a stub page of less than 2 KB.

- The filter is called on the first line of the web application, before any of the rest of the application code runs. This takes as much load as possible off the server hardware and reduces the amount of traffic sent to the bots.

- If the visitor falls under the filter conditions, we serve the visitor a special stub page. On this page, we:

  - Explain why a special page was served instead of the requested one

  - Set a special cookie in the user's browser through JavaScript

  - Run JavaScript code that redirects back to the originally requested page

- If the visitor has the special cookie, the filter transparently passes the visitor through to the requested page of the site.

- If the visitor's IP address belongs to an autonomous system on the exception list, the traffic is also passed through transparently. This condition is needed so that search engine bots are not filtered.
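The decision logic in the list above can be sketched roughly as follows. This is a minimal illustration in Python, not the code of the actual filter: the cookie name and value, the function names, the stub page text, and the exception list are all hypothetical, and the autonomous-system check is approximated by a list of IP prefixes.

```python
import ipaddress
from string import Template
from typing import Dict, Optional

# Hypothetical cookie name and value; a real deployment would likely use a
# per-visitor or signed value rather than a fixed one.
COOKIE_NAME = "ddos_filter_passed"
COOKIE_VALUE = "1"

# Hypothetical exception list. The article's filter matches autonomous systems;
# here each one is approximated by IP prefixes (a documentation-only range).
EXCEPTION_NETWORKS = [ipaddress.ip_network("192.0.2.0/24")]

# Stub page: explains itself, sets the cookie via JavaScript, then re-requests
# the same URL. A client that does not run JavaScript never gets the cookie.
STUB_TEMPLATE = Template("""<!doctype html>
<html><head><meta charset="utf-8"><title>Checking your browser</title></head>
<body>
<p>The site is under heavy load, so we are checking that your browser
runs JavaScript. You will be returned to the requested page shortly.</p>
<script>
document.cookie = "$name=$value; path=/";
window.location.replace(window.location.href);
</script>
</body></html>
""")


def in_exception_list(ip: str) -> bool:
    """True if the address falls inside one of the excepted networks."""
    address = ipaddress.ip_address(ip)
    return any(address in network for network in EXCEPTION_NETWORKS)


def filter_request(ip: str, cookies: Dict[str, str]) -> Optional[str]:
    """Return None to let the request through, or the stub page HTML to serve."""
    if cookies.get(COOKIE_NAME) == COOKIE_VALUE:
        return None  # visitor already passed the JavaScript check
    if in_exception_list(ip):
        return None  # e.g. a search engine crawler
    return STUB_TEMPLATE.substitute(name=COOKIE_NAME, value=COOKIE_VALUE)
```

In a web application, `filter_request` would be called before any other handler code, and a non-`None` result would be sent as the whole response. A browser with cookies disabled would keep receiving the stub page in this sketch, so a production version would need some way to break that loop.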

The filter project is available on github.com.

Synthetic tests of the filter

We ran the ab utility (ApacheBench, from the Apache Software Foundation) against the main page of the production site, having first taken the load off one of the nodes.

Results with the filter disabled:

ab -c 100 -n 1000 https://cleantalk.org/

Total transferred: 27615000 bytes

HTML transferred: 27148000 bytes

Requests per second: 40.75 [#/sec] (mean)

Time per request: 2454.211 [ms] (mean)

Time per request: 24.542 [ms] (mean, across all concurrent requests)

Transfer rate: 1098.84 [Kbytes/sec] received

Now the same thing with the filter on:

Total transferred: 2921000 bytes

HTML transferred: 2783000 bytes

Requests per second: 294.70 [#/sec] (mean)

Time per request: 339.332 [ms] (mean)

Time per request: 3.393 [ms] (mean, across all concurrent requests)

Transfer rate: 840.63 [Kbytes/sec] received

As you can see from the test results, enabling the filter lets the web server process about seven times more requests per second (294.70 versus 40.75) than without the filter. Naturally, we are talking only about requests from visitors without JavaScript support.
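To make the comparison between the two ab runs explicit, the figures reported above can be plugged into a few lines of Python. The numbers are copied from the ab output; the variable and function names are ours, and the second run is assumed to use the same `-n 1000` as the first.

```python
# Figures copied from the two ab runs above.
# Assumption: both runs used -n 1000, as in the command shown for the first run.
RUNS = {
    "filter off": {"requests": 1000, "total_bytes": 27_615_000, "rps": 40.75},
    "filter on": {"requests": 1000, "total_bytes": 2_921_000, "rps": 294.70},
}


def bytes_per_request(run: dict) -> float:
    """Average number of bytes the server sent per request."""
    return run["total_bytes"] / run["requests"]


off, on = RUNS["filter off"], RUNS["filter on"]

throughput_gain = on["rps"] / off["rps"]
traffic_reduction = off["total_bytes"] / on["total_bytes"]

if __name__ == "__main__":
    print(f"requests per second: x{throughput_gain:.2f}")
    print(f"bytes per request, filter off: {bytes_per_request(off):.0f}")
    print(f"bytes per request, filter on:  {bytes_per_request(on):.0f}")
    print(f"outgoing traffic per request:  /{traffic_reduction:.2f}")
```

With these inputs the throughput gain comes out at roughly 7.2 times, and the data sent per request drops by roughly 9.5 times.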

The filter in practice: how we saved the site from one small DDoS attack

From time to time we face DDoS attacks on our own corporate site, https://cleantalk.org. During the most recent of these attacks, we applied the DDoS filter at the level of the website application.

The start of the attack

The attack started at 18:10 UTC+5 on January 18, 2018, and consisted of GET requests to the URL https://cleantalk.org/blacklists. The network interfaces of the front-end servers saw an additional 1000-1200 kbit/second of incoming traffic, i.e. each server received about 150 GET requests per second, five times the nominal load. As a consequence, the load average of the front-end servers and database servers rose sharply, and the site began returning 502 errors because php-fpm had no free worker processes left.

Attack analysis

After spending some time studying the logs, it became clear that this was a DDoS attack, because:

1. 5 out of every 6 requests were for the same URL.

2. There was no clearly defined group of IP addresses creating the load on the URL from item 1.

3. CPU load on the front-end servers rose an order of magnitude more than the load on the network interfaces.
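The first two checks from the log analysis above can be approximated with a short script. The sketch below is only an illustration in Python: it assumes the access log has already been parsed into (client IP, URL path) pairs, and the sample records and function names are hypothetical, not taken from our logs.

```python
from collections import Counter
from typing import List, Tuple

Record = Tuple[str, str]  # (client IP, requested URL path)

# Hypothetical sample, using documentation-only IP addresses.
SAMPLE: List[Record] = [
    ("198.51.100.1", "/blacklists"),
    ("198.51.100.2", "/blacklists"),
    ("198.51.100.3", "/blacklists"),
    ("198.51.100.4", "/blacklists"),
    ("198.51.100.5", "/blacklists"),
    ("198.51.100.6", "/"),
]


def top_url_share(records: List[Record]) -> Tuple[str, float]:
    """Most requested URL and its share of all requests."""
    urls = Counter(url for _, url in records)
    url, hits = urls.most_common(1)[0]
    return url, hits / sum(urls.values())


def top_ip_share(records: List[Record], url: str, n: int = 10) -> float:
    """Share of requests to `url` that came from its n busiest client IPs."""
    ips = Counter(ip for ip, requested in records if requested == url)
    busiest = sum(hits for _, hits in ips.most_common(n))
    return busiest / sum(ips.values())


if __name__ == "__main__":
    url, share = top_url_share(SAMPLE)
    print(f"{url} receives {share:.0%} of requests")
    print(f"busiest IP sends {top_ip_share(SAMPLE, url, n=1):.0%} of them")
```

A high share for one URL combined with a low share for the busiest IP addresses matches the pattern described in items 1 and 2: the load is concentrated on one page but spread across many sources, so blocking a handful of addresses would not help.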

Accordingly, we decided to enable the visitor filter using the algorithm described above, additionally checking incoming traffic against our blacklist database, thereby reducing the probability of showing the stop page to legitimate visitors to the site.

Enabling the filter

After we spent some more time preparing the filter, it was switched on at 19:15-19:20.

After a few minutes, we saw the first positive results: first the load average returned to normal, then the load on the network interfaces fell. A few hours later, the attack was repeated twice, but its consequences were almost invisible, and the frontends worked without 502 errors.

Conclusion

As a result, using very simple JavaScript code, we solved the problem of filtering bot traffic, thereby extinguishing the DDoS attack and restoring the site's normal availability.

To be honest, this bot filtering algorithm was not invented on the day of the attack described above. A few years ago, we added a feature called SpamFireWall to our Anti-Spam service. SpamFireWall is used by more than 10 thousand websites, and there is a separate article about it.

SpamFireWall (SFW) was developed primarily to deal with spam bots, but since the lists of spambots overlap with the lists of other bots used for questionable purposes, SFW is also quite effective at stopping small DDoS attacks on a site.

About CleanTalk service

CleanTalk is a cloud-based service for protecting websites from spambots. CleanTalk uses protection methods that are invisible to the visitors of the website. This lets you do away with protection methods that require users to prove that they are human (captcha, question-answer, etc.).